Digesting NVIDIA Physical AI and Robotics Day Summary (2/8)

Session 2: Accelerating Next Generation Chip Design with Agentic AI and GPUs

This post continues my series on NVIDIA GTC's Physical AI and Robotics Day. Let’s dive into the second session, “Accelerating Next Generation Chip Design with Agentic AI and GPUs” by Rambo Jacoby.

For the tl;dr crowd:

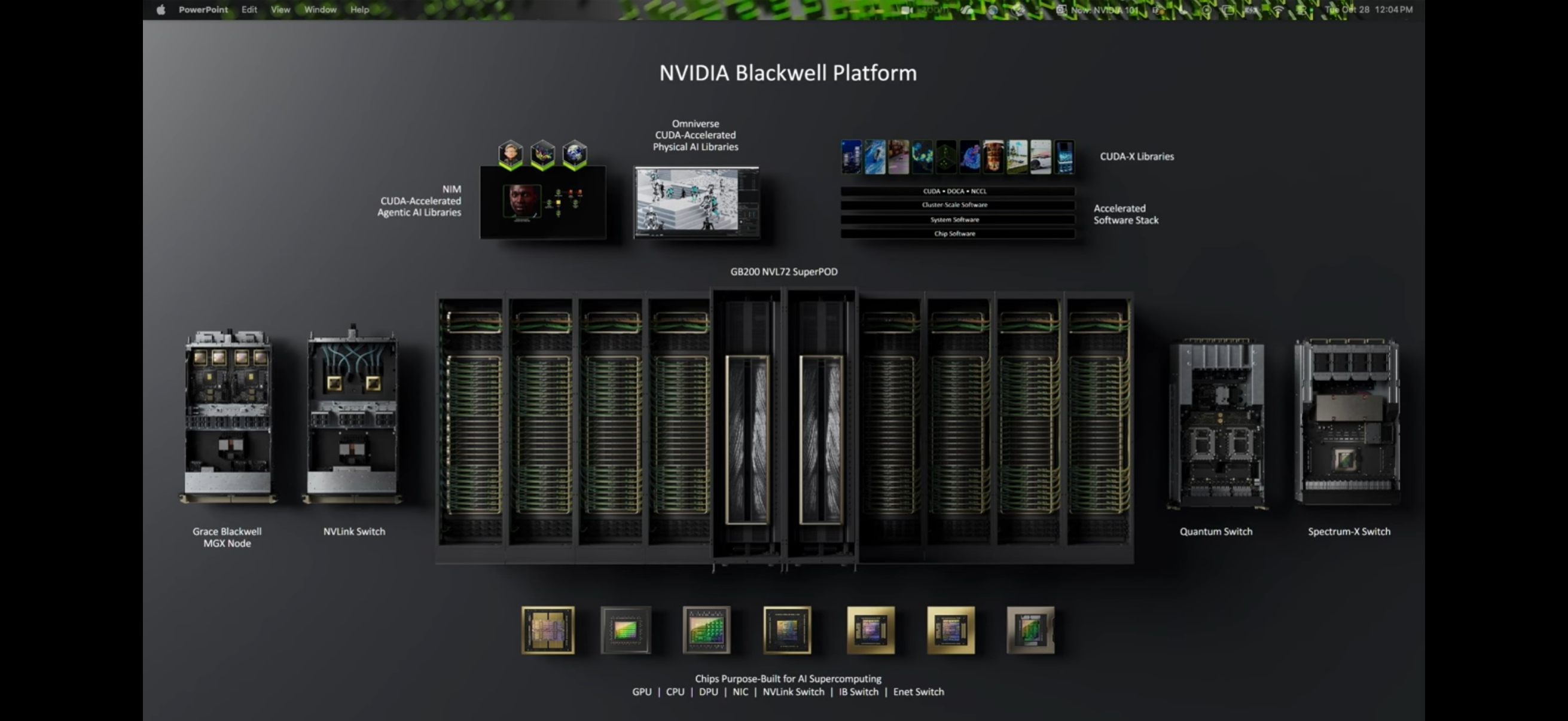

- NVIDIA as a Platform Company: NVIDIA is no longer just a “chip company” but a systems and platform provider. They control the entire stack—including GPUs, CPUs (Grace), networking, and software—to optimize for both performance and power efficiency

- Hardware Innovations: The Blackwell B100 GPU packs over 200 billion transistors and 15,000 cores for massively parallel processing. The GB300 Super Chip pairs Blackwell GPUs with the ARM-based Grace CPU, which offers double the performance of traditional x86 CPUs at half the power

- Software & Foundational Models: NVIDIA hosts open-source foundational models at build.nvidia.com. Enterprises can build AI agents while maintaining full ownership of their intellectual property

What I think this implies:

NVIDIA is also betting on growth in “open source” AI usage. It is interesting to see NVIDIA host a number of non-US open-weight models, predominantly Chinese ones (DeepSeek, Kimi, Qwen, etc.).

Given the recent success of Google’s “Gemini+TPU” approach, NVIDIA will need to sharpen its strategy to extend its influence among long-tail customers in the AI ecosystem.

In 2025, we have watched US-based closed-model developers (the LLM labs) behave more like product companies, threatening AI application developers.

Korean market angle: From talking with a number of Korean AI application and solution startups this year, I learned that most have some form of open-weight model in their stack, with Qwen being the most widely used.

Going forward, I believe more Korean startups will follow this trend, for several reasons:

- Non-existent (or weak) local Korean LLMs and ecosystems

- Lower willingness to pay among customers than in larger markets, and thus a greater need to cut costs

- Low cloud adoption: open-weight models are easier to deploy on existing on-premise infrastructure

- Improving open-model performance on smaller Asian languages (especially Korean)

Full Presentation

- The presenter is Rambo Jacoby, Principal Product Manager at NVIDIA, who focuses on Autonomous Vehicle (AV) infrastructure and enterprise AI applications

- NVIDIA as a Platform: NVIDIA builds & controls every aspect of the AI acceleration system. NVIDIA is a systems company

- Blackwell Chip: A GPU is a parallel computer. The Blackwell B100 has 200 billion transistors and 15,000 cores, and it took NVIDIA $10B to develop. But is NVIDIA a GPU company? No, NVIDIA is a platform company

- GB300 Super Chip: ARM-based Grace CPU which offers double the performance of traditional x86 CPUs at half the power

- NVLink Rack: An NVL72 rack links 36 GB300 Super Chips, which means 72 Blackwell GPUs go into a single rack. All networking is provided by NVIDIA

- How much performance per watt has improved: Seven years ago, the Summit supercomputer (used for weather forecasting) delivered 120 petaflops from 27,000 GPUs in a 6,000 sq ft data center, drawing 13 megawatts. Today, a supercomputer built by NVIDIA delivers roughly 2.5x that performance (300 petaflops) in 1 square meter at 120 kilowatts, using about 1/100 of the power and giving roughly 270x better performance per watt

- NVIDIA Build (build.nvidia.com): NVIDIA hosts open models and helps enterprises build AI agents while maintaining full ownership of their intellectual property

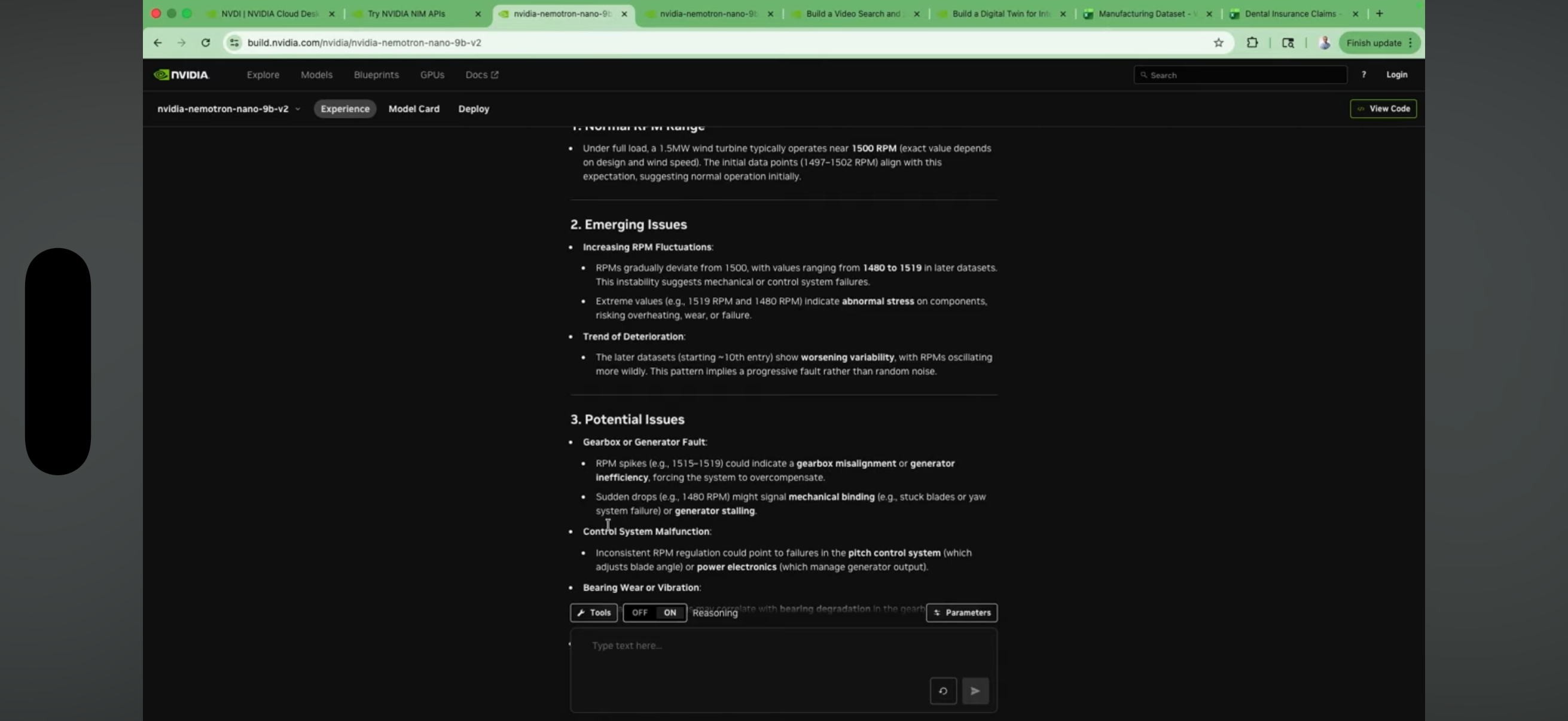
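The rack and efficiency figures quoted in the talk can be sanity-checked with a few lines of Python. This is a minimal sketch that only re-derives ratios from the numbers above; the figures are the presenter's and are not independently verified, and the two-GPUs-per-superchip split is an assumption inferred from the 36 → 72 count.

```python
# Figures as quoted in the talk (not independently verified).
SUMMIT_PFLOPS = 120.0       # petaflops
SUMMIT_POWER_KW = 13_000.0  # 13 megawatts
NEW_PFLOPS = 300.0          # petaflops
NEW_POWER_KW = 120.0        # 120 kilowatts

SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2  # assumption inferred from "36 GB300 chips -> 72 GPUs"


def pflops_per_kw(pflops: float, power_kw: float) -> float:
    """Performance per unit of power, in petaflops per kilowatt."""
    return pflops / power_kw


gpus_per_rack = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
speedup = NEW_PFLOPS / SUMMIT_PFLOPS
power_ratio = NEW_POWER_KW / SUMMIT_POWER_KW
efficiency_gain = pflops_per_kw(NEW_PFLOPS, NEW_POWER_KW) / pflops_per_kw(
    SUMMIT_PFLOPS, SUMMIT_POWER_KW
)

print(f"GPUs per NVL72 rack: {gpus_per_rack}")
print(f"Raw speedup: {speedup:.1f}x")
print(f"Power drawn: {power_ratio:.2%} of Summit's")
print(f"Performance-per-watt gain: ~{efficiency_gain:.0f}x")
```

The point of the exercise: "1/100" describes the power draw, while performance per watt improves by the speedup times the power reduction (about 2.5 × 108, or roughly 270x).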

- Predictive Maintenance example: A reasoning model analyzes wind turbine data to detect potential gearbox issues or ice accumulation before a failure occurs

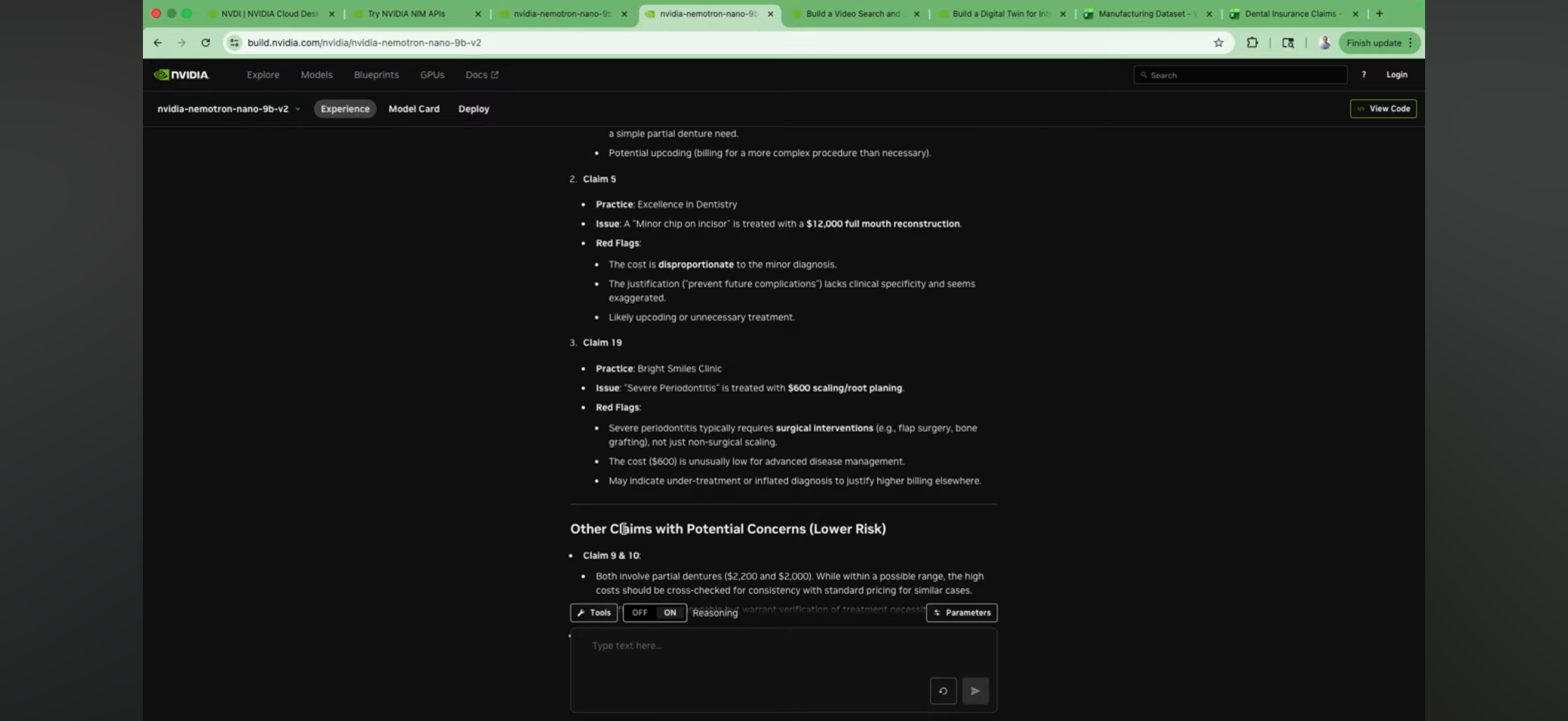
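The talk used a reasoning model for this. As a much simpler point of comparison, here is a minimal sketch of a classical statistical baseline (a rolling z-score) that flags a sensor reading when it jumps far outside its recent range. This is not NVIDIA's method; the function name, window size, threshold, and the gearbox temperature series are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates from the trailing window's mean
    by more than `threshold` sample standard deviations (a rolling z-score)."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: z-score is undefined, so skip this point
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical gearbox oil temperatures (deg C), one reading per interval.
gearbox_temps = [61.0, 61.4, 60.8, 61.2, 61.1, 61.3, 60.9, 61.2, 68.5, 61.0]
print(flag_anomalies(gearbox_temps))  # → [8]
```

Only the 68.5 °C spike is flagged. A fixed threshold like this says nothing about the cause of an anomaly, which is the gap the talk's reasoning-model approach is meant to fill.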

- Fraud Detection example: The AI identifies fraudulent dental insurance claims by comparing diagnoses with the typical costs of suggested treatments

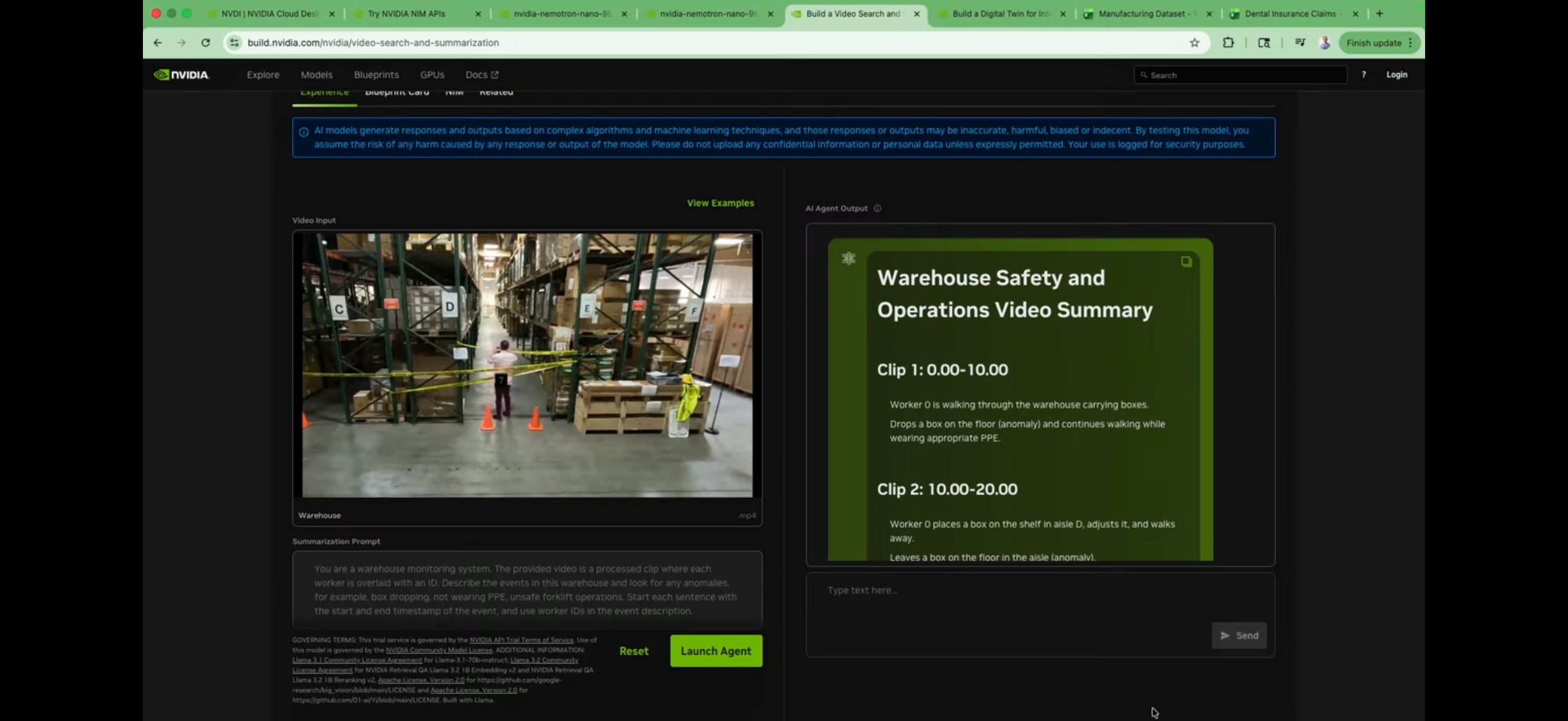

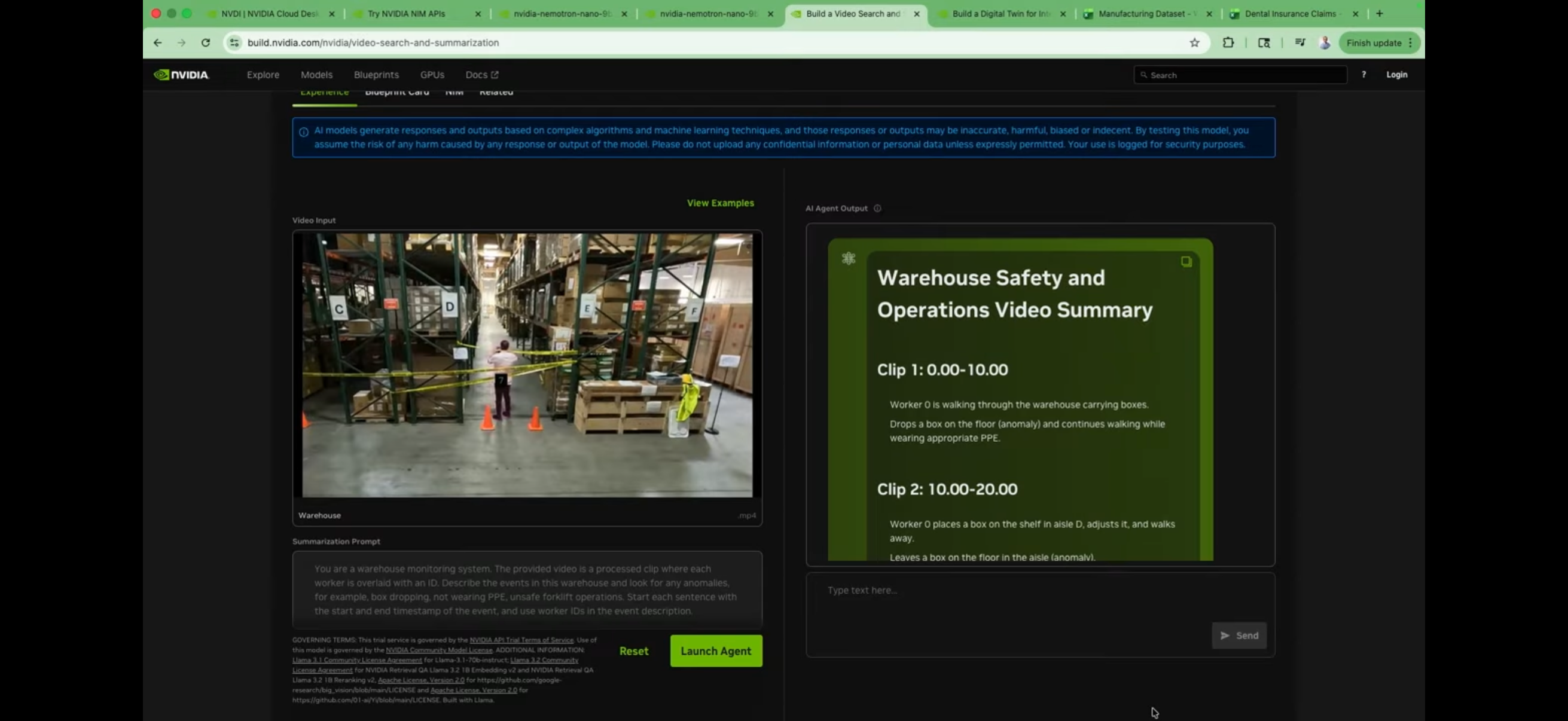
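The fraud check described above boils down to two questions: does the treatment fit the diagnosis, and is the billed amount far above what that treatment typically costs? The sketch below encodes both as plain rules. It is a hypothetical stand-in, not the agent shown in the talk; the cost table, the diagnosis mappings, and the 2x tolerance are made-up illustration values.

```python
from dataclasses import dataclass

# Hypothetical reference data: typical cost (USD) per treatment and the
# diagnoses each treatment is normally prescribed for.
TYPICAL_COST = {"filling": 150.0, "root_canal": 1000.0, "crown": 1200.0}
VALID_FOR = {
    "filling": {"cavity"},
    "root_canal": {"pulpitis", "abscess"},
    "crown": {"fracture", "pulpitis"},
}


@dataclass
class Claim:
    claim_id: str
    diagnosis: str
    treatment: str
    billed: float


def review(claim: Claim, max_ratio: float = 2.0) -> list[str]:
    """Return human-readable reasons a claim looks suspicious (empty if none)."""
    if claim.treatment not in TYPICAL_COST:
        return [f"unknown treatment '{claim.treatment}'"]
    reasons = []
    if claim.diagnosis not in VALID_FOR[claim.treatment]:
        reasons.append(f"'{claim.treatment}' is unusual for diagnosis '{claim.diagnosis}'")
    typical = TYPICAL_COST[claim.treatment]
    if claim.billed > max_ratio * typical:
        reasons.append(f"billed {claim.billed:.0f} vs typical {typical:.0f}")
    return reasons


claims = [
    Claim("A1", "cavity", "filling", 160.0),
    Claim("A2", "cavity", "root_canal", 2600.0),
]
for c in claims:
    print(c.claim_id, review(c) or "ok")
```

Claim A1 passes, while A2 trips both rules. An AI agent's advantage over fixed rules like these is handling messy, free-text claims, but the underlying comparison is the same.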

- Video Search & Summarization (VSS): An engine that watches security footage in real-time to detect anomalies, such as dropped inventory or safety violations in restricted areas

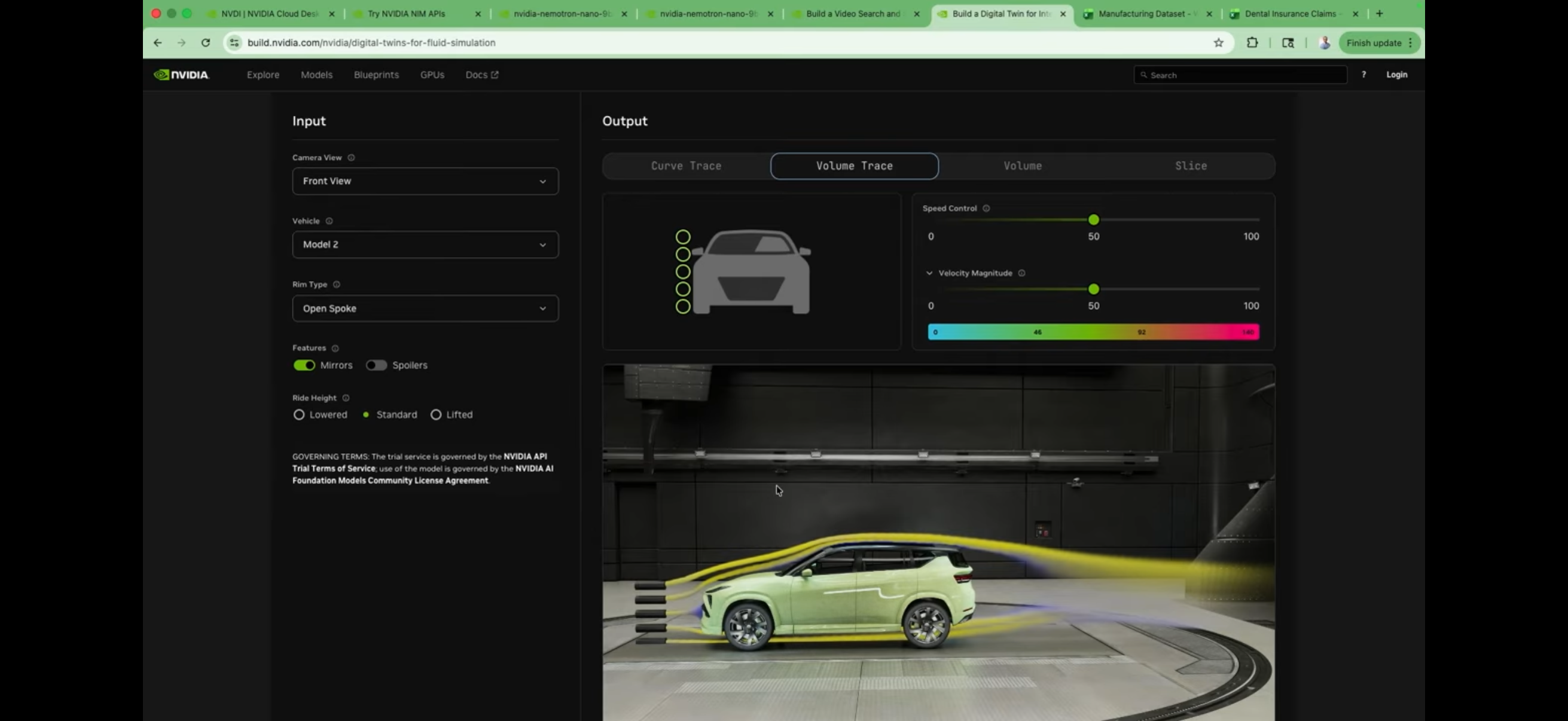
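One of the VSS examples, spotting people in restricted areas, reduces at its final step to a geometric test on detector output. Assuming an upstream object detector (not shown) already returns labeled bounding boxes per frame, a minimal sketch of the zone check might look like this. The `Box` type, zone coordinates, and detections are hypothetical, and the talk did not describe the real VSS engine's logic.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float


def center(b: Box) -> tuple[float, float]:
    return ((b.x1 + b.x2) / 2, (b.y1 + b.y2) / 2)


def in_zone(point: tuple[float, float], zone: Box) -> bool:
    x, y = point
    return zone.x1 <= x <= zone.x2 and zone.y1 <= y <= zone.y2


def violations(frame_idx: int, detections: list[tuple[str, Box]], zone: Box) -> list[str]:
    """Describe every detected person whose box center lies inside the restricted zone."""
    return [
        f"frame {frame_idx}: {label} inside restricted zone"
        for label, box in detections
        if label == "person" and in_zone(center(box), zone)
    ]


RESTRICTED = Box(400, 100, 640, 360)  # hypothetical zone around a machine
dets = [
    ("person", Box(450, 150, 520, 330)),    # center (485, 240): inside
    ("forklift", Box(420, 200, 600, 350)),  # inside, but not a person
    ("person", Box(10, 50, 80, 300)),       # center (45, 175): outside
]
print(violations(42, dets, RESTRICTED))
```

Using the box center keeps the rule simple; a stricter variant could flag any overlap between the box and the zone.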

- Physics models for Physical AI: The presentation delves into Physical AI, using PhysicsNeMo to simulate aerodynamics in real time

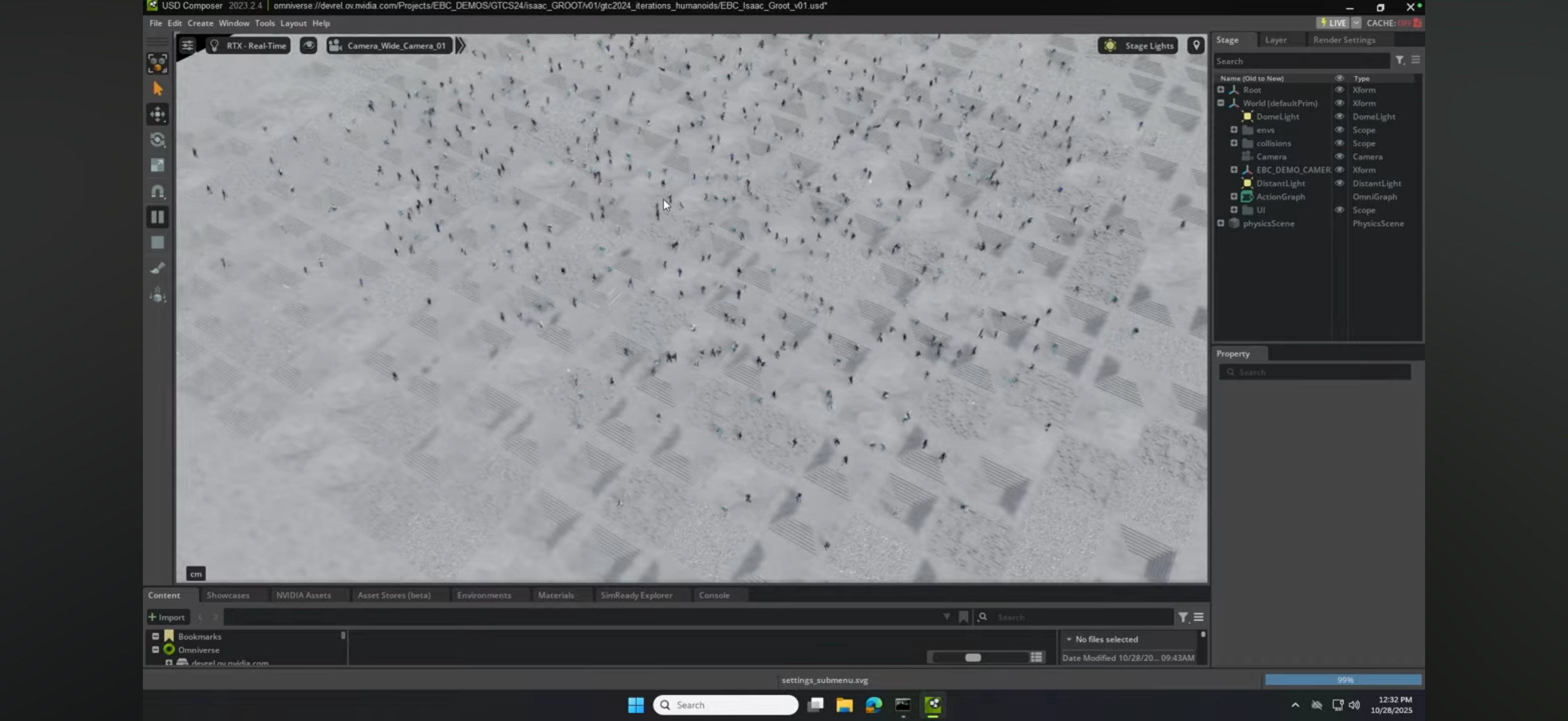

- Simulation platform for Physical AI: Beyond edge inference hardware such as the “Jetson Nano”, NVIDIA also provides “GR00T”, a foundation model for humanoid robots that helps them understand gravity, friction, and path planning within the “Omniverse” simulation environment

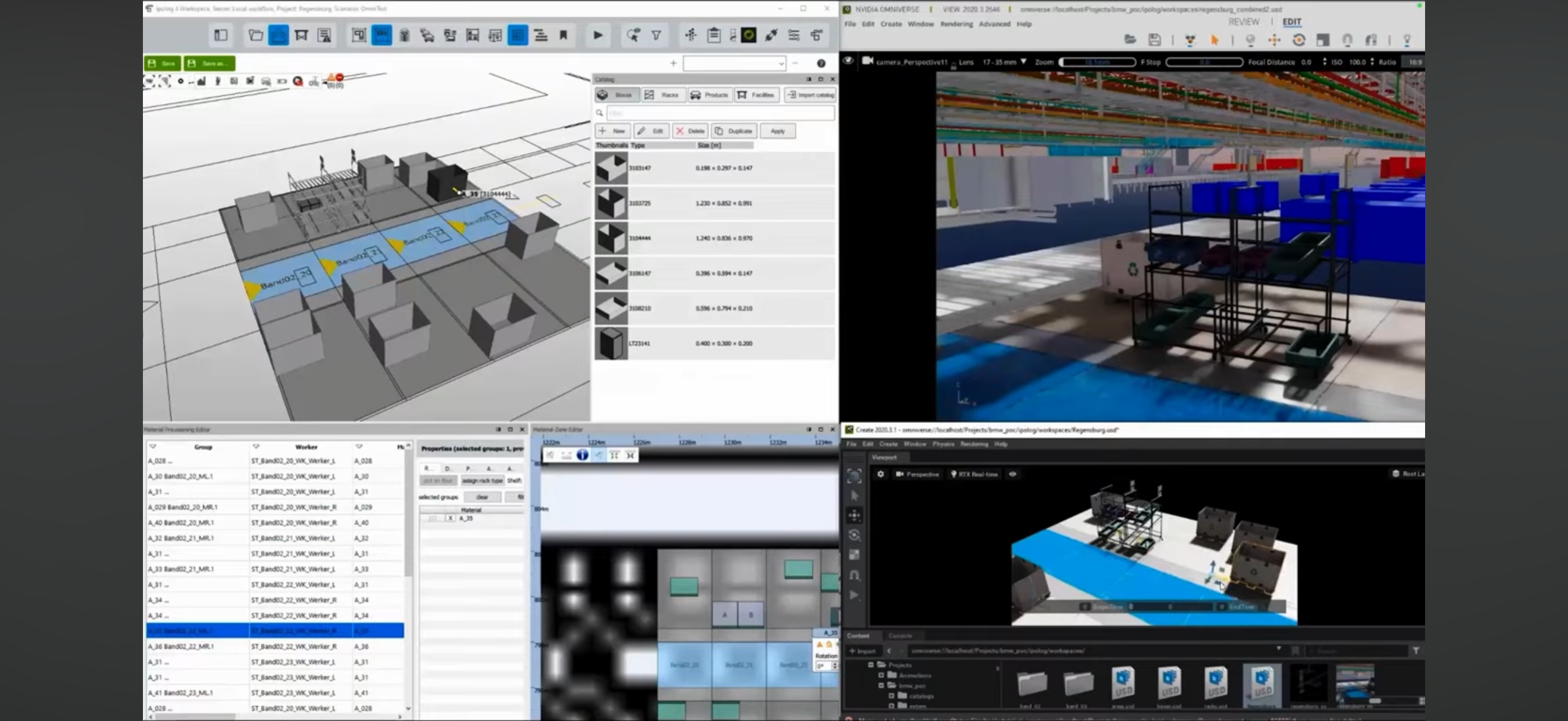

- Simulation for factories: Using Omniverse, enterprises can simulate entire factories

Q&A Session

How does a manufacturing customer start building a digital twin?

→Use existing products built by Siemens, Dassault Systèmes, Cadence, etc., and import the files into Omniverse

Does NVIDIA “model” the assets itself?

→No, we rely on third-party connectors that bring in OpenUSD or CAD files.

Which computations are the heaviest?

→Video inference is complex. We have a few methods to reduce the load, but video is computationally expensive.
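To get a feel for why video dominates the compute budget, count frames. The talk did not say which load-reducing methods NVIDIA uses; frame subsampling below is just one generic lever, and the 30 fps, 10-camera, and 1 fps figures are assumptions for illustration.

```python
def frames_per_hour(fps: float, cameras: int = 1) -> int:
    """Frames a camera fleet produces per hour at a given frame rate."""
    return round(fps * 3600 * cameras)


full_rate = frames_per_hour(30, cameras=10)  # every frame from 10 cameras at 30 fps
sampled = frames_per_hour(1, cameras=10)     # analyze only 1 frame per second per camera

print(f"{full_rate:,} frames/hour at full rate")
print(f"{sampled:,} frames/hour when sampling 1 fps ({full_rate // sampled}x fewer)")
```

Even after a 30x cut, tens of thousands of frames per hour still have to pass through a vision model, which is consistent with the presenter's point.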

Why aren’t we using more open models?

→Closed models are easy to integrate. Open models need additional expertise, but with open models the IP stays with the user/company.