Digesting NVIDIA Physical AI and Robotics Day: Summary (1/8)

In October, NVIDIA held a Physical AI and Robotics Day in Washington, D.C. The keynotes highlight how NVIDIA views the current Physical AI roadmap. These are my notes on what NVIDIA is saying, and what it implies for the future of AI.

This series has 8 parts, each covering one of the public sessions. Let’s dive into the first session, Accelerating the Physical AI Era with Digital Twins and Real-Time Simulation by Rev Lebaredian.

tl;dr:

- To build Physical AI, you need real-world physical data. However, that data is scarce and difficult to obtain.

- NVIDIA proposes NVIDIA Omniverse, a solution based on simulation computing (digital twins and real-time simulation).

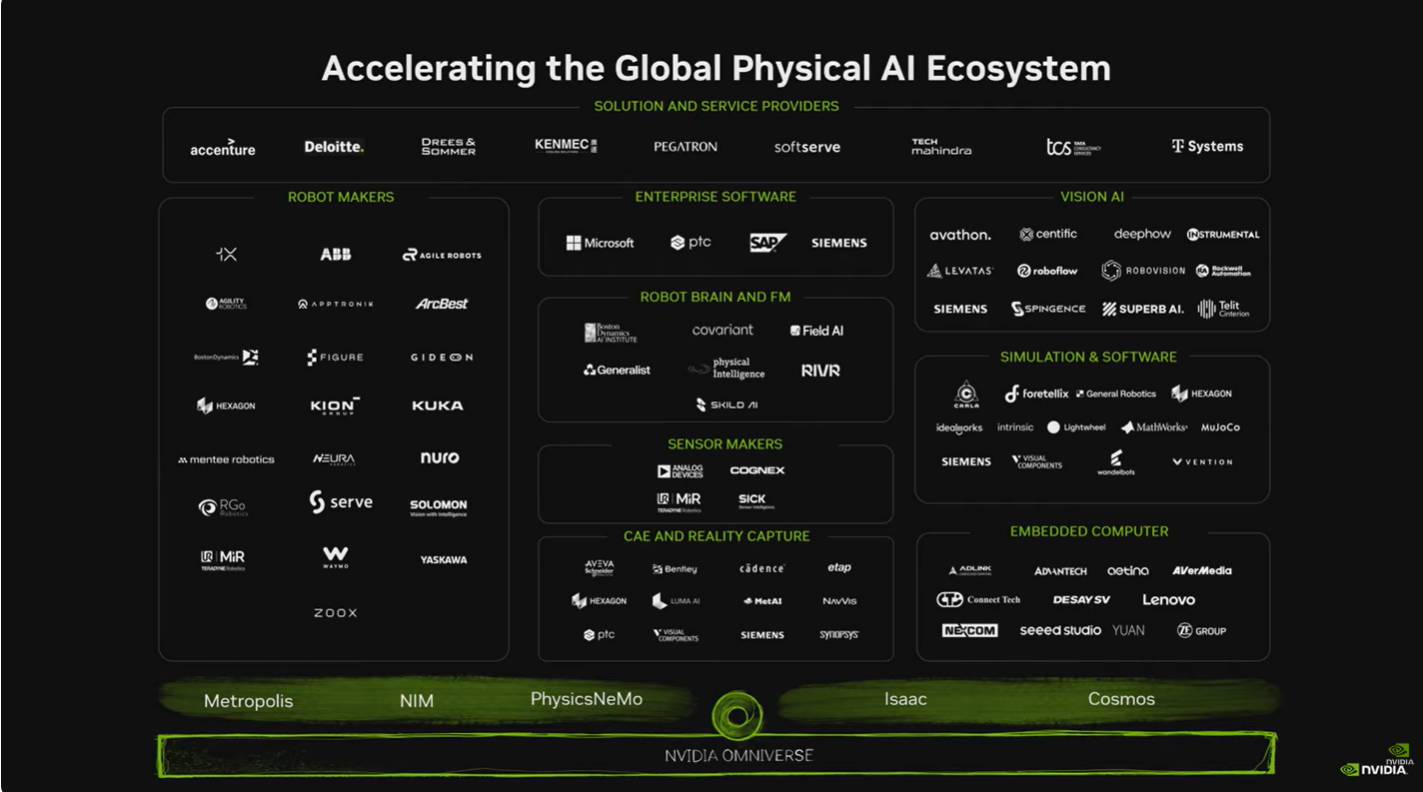

- NVIDIA has developed 1) Cosmos, a world foundation model, and 2) GR00T, a physical AI foundation model. NVIDIA aims to build a physical AI ecosystem based on the foundation models and simulation platform it provides.

What I think this implies:

- NVIDIA is pushing simulation as a way to address the lack of training data for physical AI. However, digital twins and simulation methods are secondary to building physical AI and are limited by low-quality data.

- With Omniverse as the tool that enables simulation, NVIDIA’s goal is to build a Physical AI ecosystem around Cosmos and GR00T, just as NVIDIA built the autonomous driving industry around the CUDA ecosystem in the past.

- We’ve heard from the robotics industry that there is a strong need for real-world data to build physical AI. NVIDIA is also investing heavily in robotics companies and support programs (such as NVIDIA Inception).

- I think that robotics platforms that are generating real-world data can get support (=investment) from companies like NVIDIA, Google, Meta, etc. that are trying to build a physical AI ecosystem, even without revenue or performance.

- Just as AI labs (OpenAI, Anthropic, etc.) received massive investment from Big Tech (cloud service providers) early on, a similar industry pattern might already be emerging between robotics companies and Big Tech.

- However, robot platform manufacturers are characterized by a very narrow form factor (dependent on their own hardware), so it is doubtful that they can utilize a “data arbitrage play” within the physical AI space.

- In the future, if a “data arbitrage play” becomes possible, it should look something like Tesla trying to sell FSD. Tesla has perfected its 13-camera E2E autonomous driving platform, FSD, which is becoming the standard for autonomous driving, and is attempting to license it to other manufacturers. I assume general-purpose robotics developers may be able to use the same playbook once the shape and form factor of the general robot (=humanoid) is standardized.

Full Presentation

- Rev Lebaredian is the Vice President of Omniverse & Simulation Technology at NVIDIA.

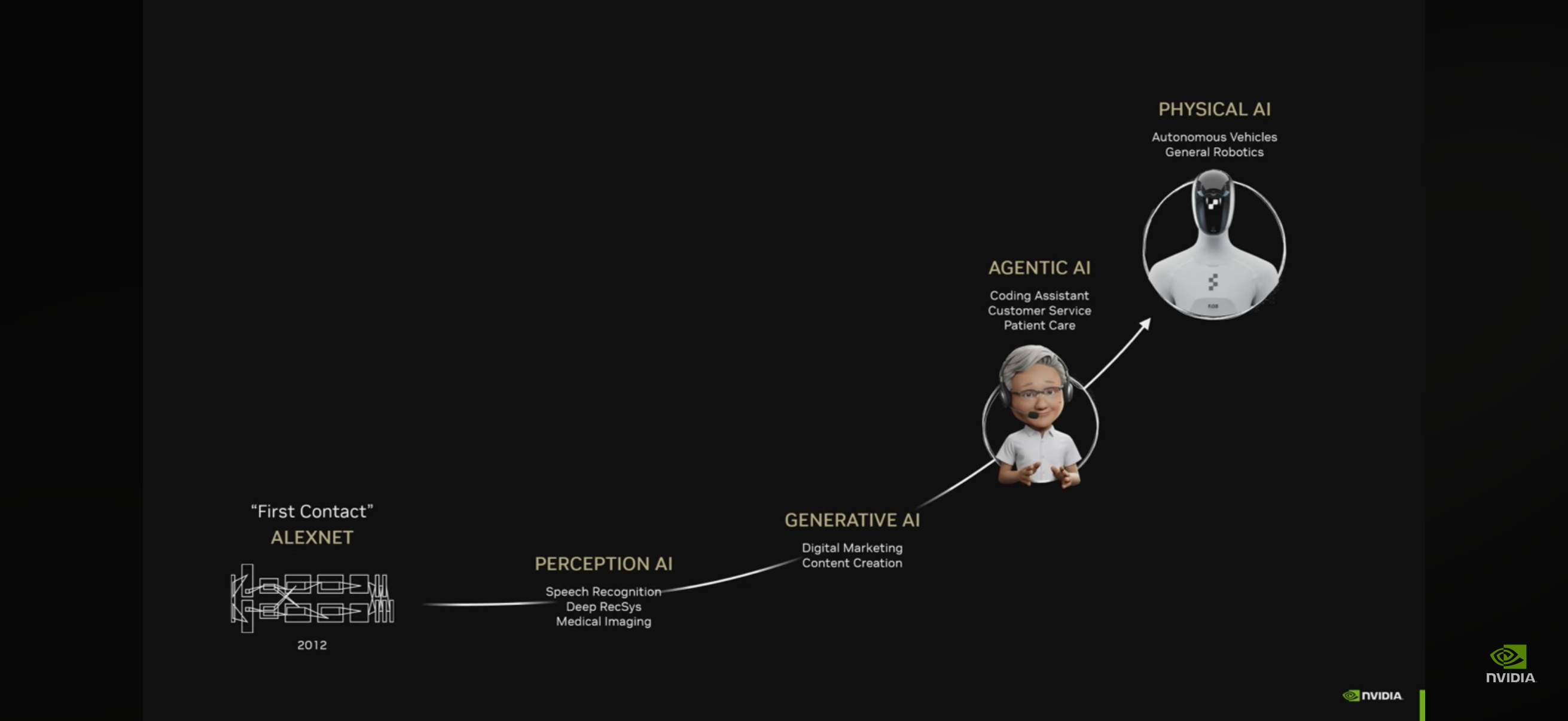

- Computer science has entered an era of throwing a large amount of data at a supercomputer and letting the computer build the model, rather than developers building the model themselves.

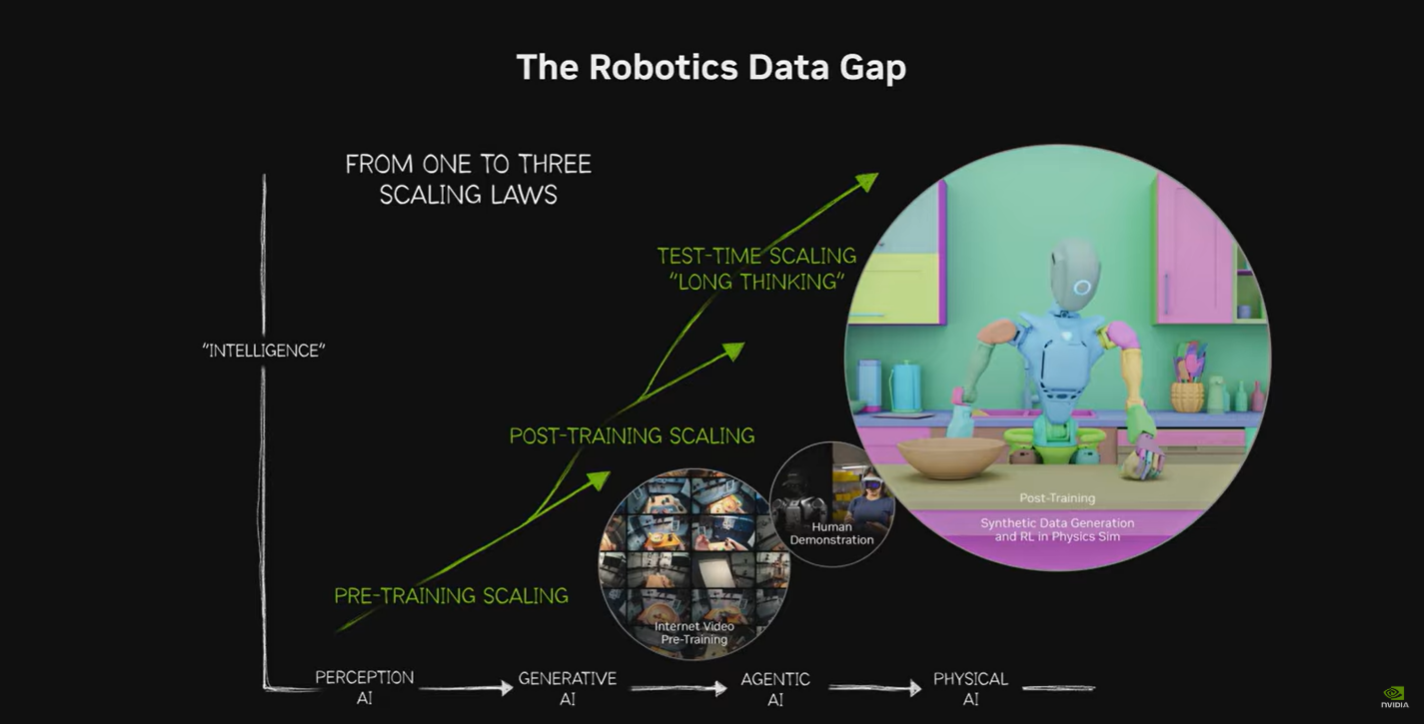

- Starting with AlexNet, which first made image classification possible, the progression is: Perception AI (based on deep learning) → Generative AI (based on Transformers) → Agentic AI (now enabling autonomous decision-making)

- Soon, the era of Physical AI, where AI with a physical form perceives and acts in the world, will begin. It will have a much greater impact because it breaks through the limitations of virtual space (IT space).

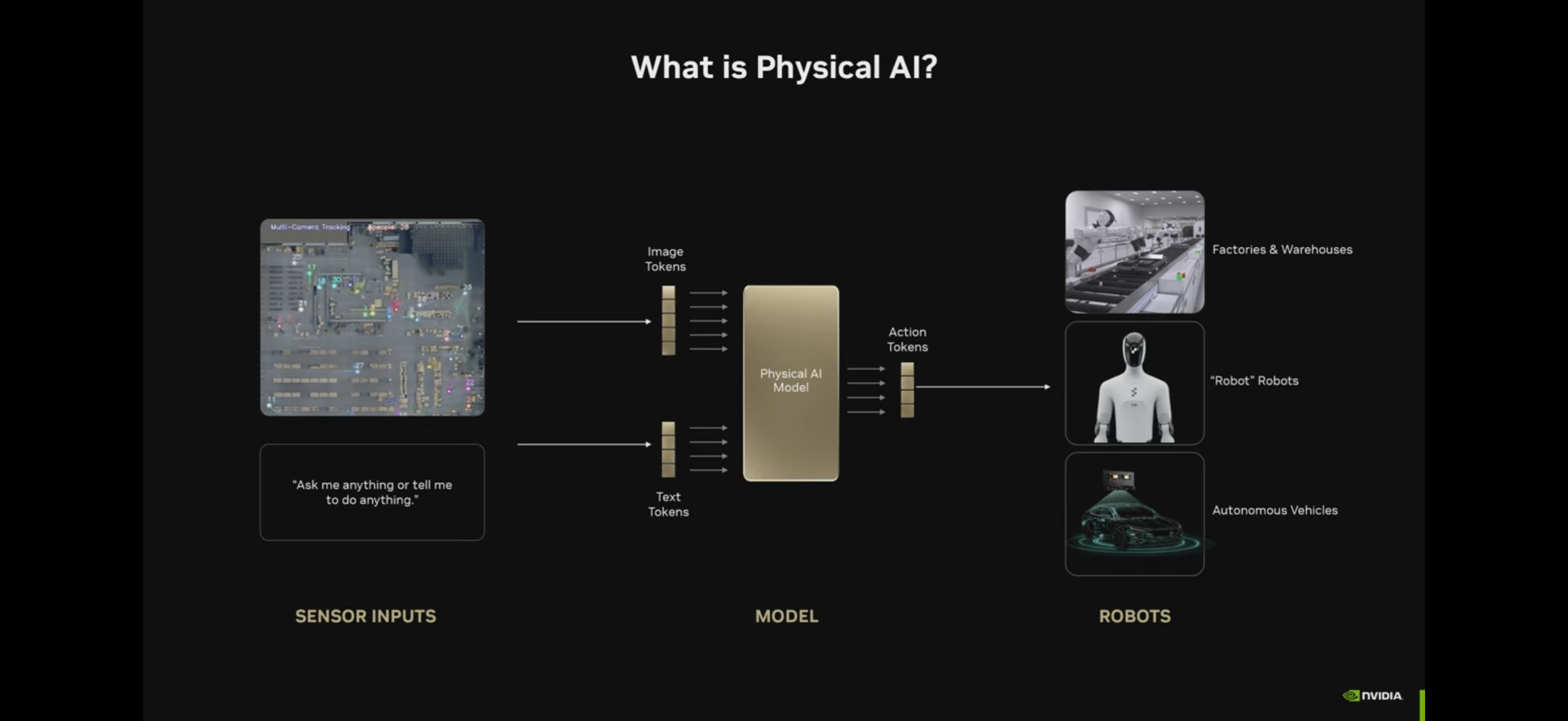

Physical AI takes inputs (mainly image + text) that are converted into tokens and outputs action tokens, which are used to control robots.

In other words, my understanding is that it still uses the Transformer architecture.
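To make "action tokens" concrete, here is a minimal sketch of one common way to turn continuous robot actions into discrete tokens and back: uniform binning per action dimension. The talk did not say how GR00T tokenizes actions, so the bin count, the normalized range, and the function names below are all assumptions for illustration only.

```python
from typing import List

# Assumptions for illustration (not from the talk):
NUM_BINS = 256          # hypothetical token vocabulary size per action dimension
LOW, HIGH = -1.0, 1.0   # hypothetical normalized action range


def action_to_tokens(action: List[float]) -> List[int]:
    """Map each continuous action value to a discrete bin index (token)."""
    tokens = []
    for value in action:
        clipped = min(max(value, LOW), HIGH)           # keep value inside the range
        fraction = (clipped - LOW) / (HIGH - LOW)      # rescale to [0, 1]
        tokens.append(min(int(fraction * NUM_BINS), NUM_BINS - 1))
    return tokens


def tokens_to_action(tokens: List[int]) -> List[float]:
    """Map each token back to the center of its bin."""
    width = (HIGH - LOW) / NUM_BINS
    return [LOW + (t + 0.5) * width for t in tokens]


if __name__ == "__main__":
    joint_command = [-1.0, 0.0, 0.37, 1.0]   # made-up joint targets
    tokens = action_to_tokens(joint_command)
    print(tokens)
    print(tokens_to_action(tokens))
```

The round trip loses at most half a bin width per dimension, which is the usual trade-off of treating actions as a vocabulary that a Transformer can predict.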

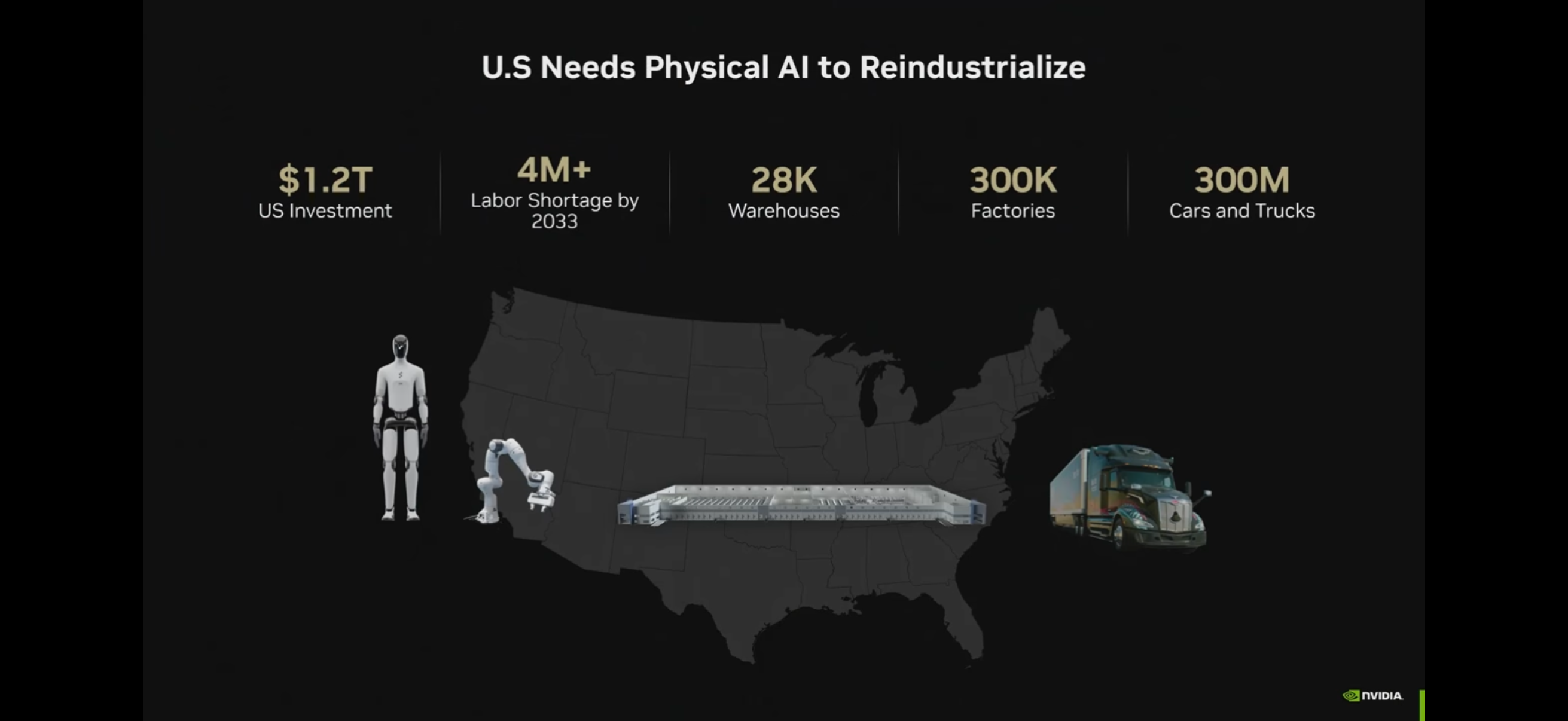

NVIDIA believes that the three most important robots are: 1) factories/warehouses 2) “real” robots (robots in the conventional sense) 3) self-driving cars.

- The U.S. needs a lot of robots to maintain + grow its productivity.

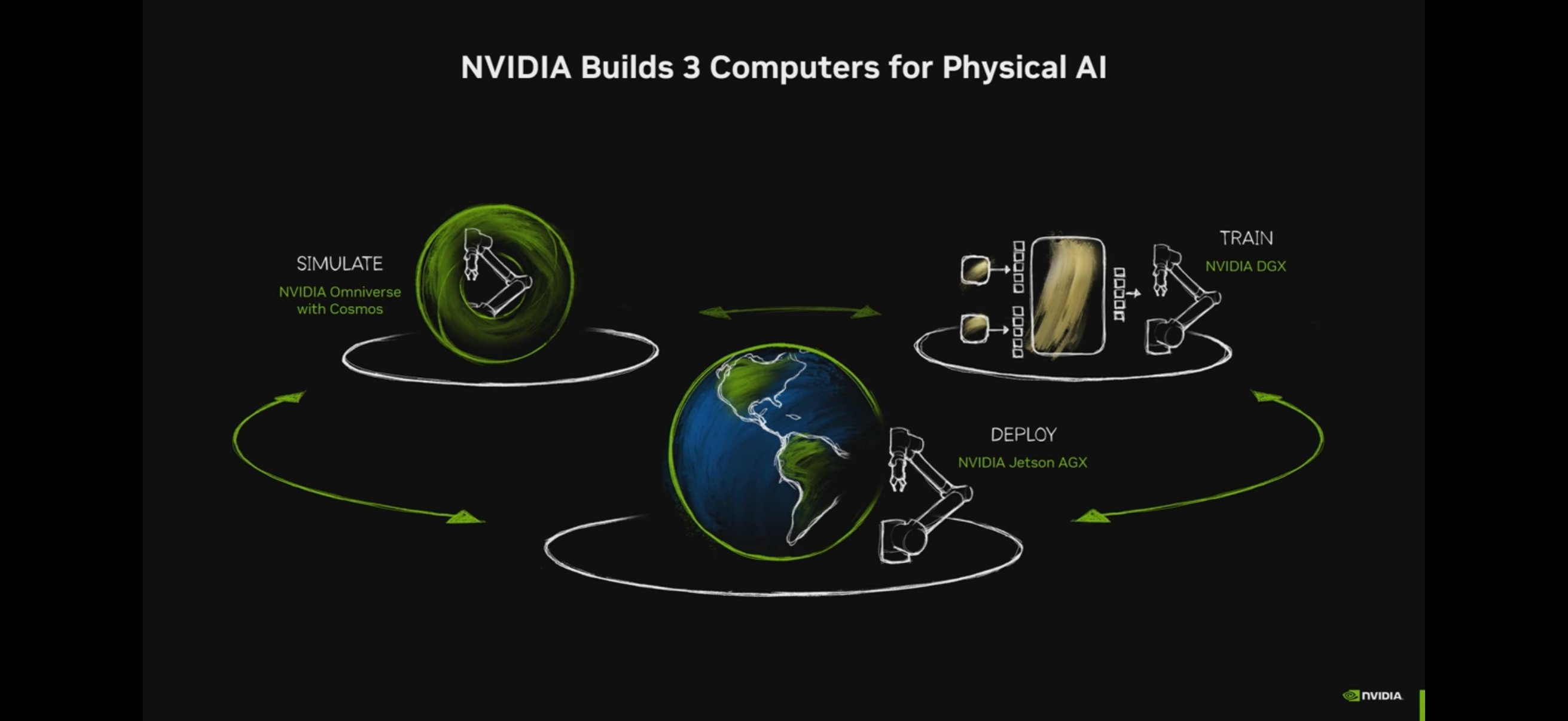

There are 3 types of computers (or computing) that NVIDIA offers:

Deploy: edge computers (usually for inference) that run inside robots, such as Jetson, AGX, Thor, etc.

Train: computers for training (learning) models, which is NVIDIA’s current main business.

Simulate: the main topic of this presentation, a simulation computer. NVIDIA calls it the Omniverse Computer. The idea is to build a virtual world that follows the laws of the physical world, generate synthetic data in it, and use that data to develop models.

Why simulation is necessary: Real-world physical data is difficult to obtain, so simulation grounded in physical laws is needed to fill the gap.

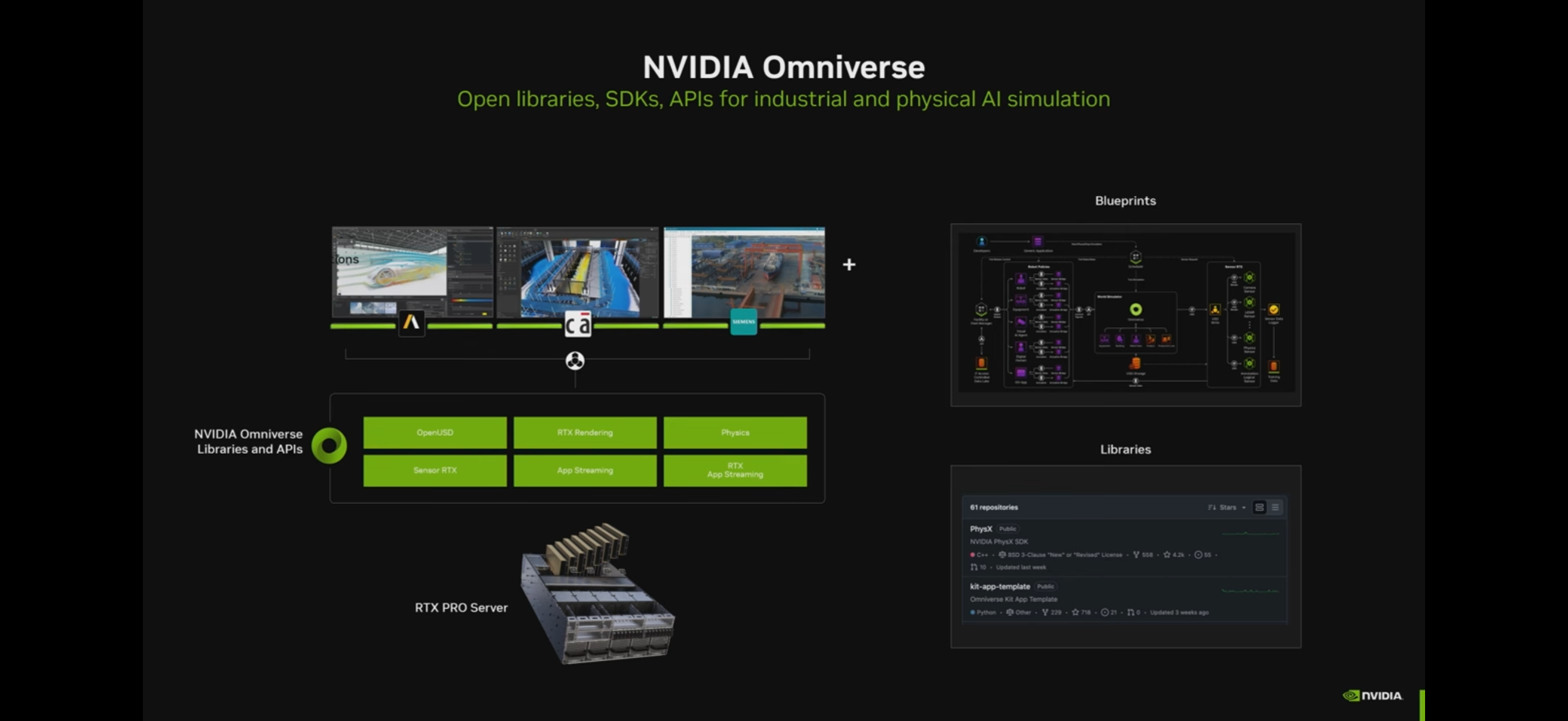

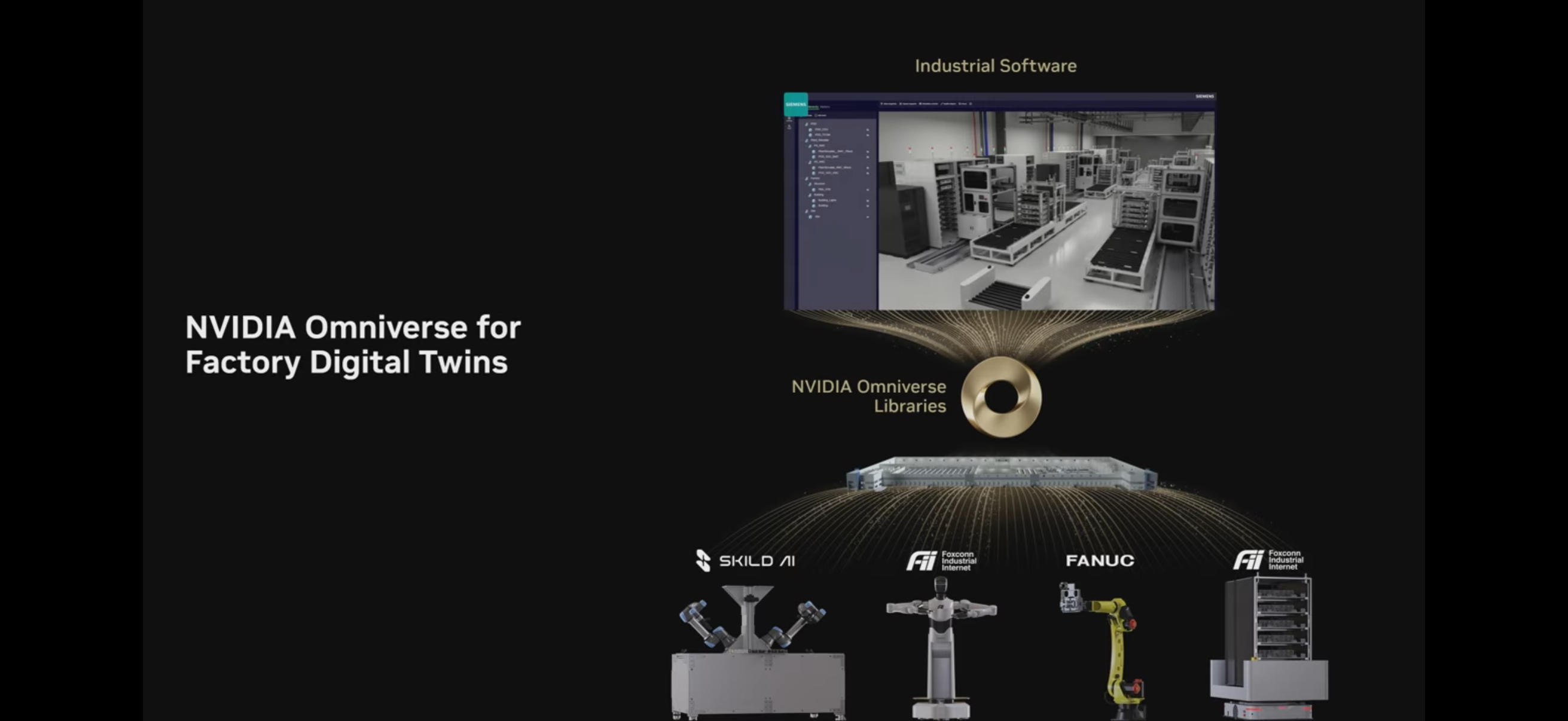

- What is NVIDIA Omniverse: A set of libraries and APIs provided by NVIDIA that allows customers who want to build physical AI to build models/applications on top of it.

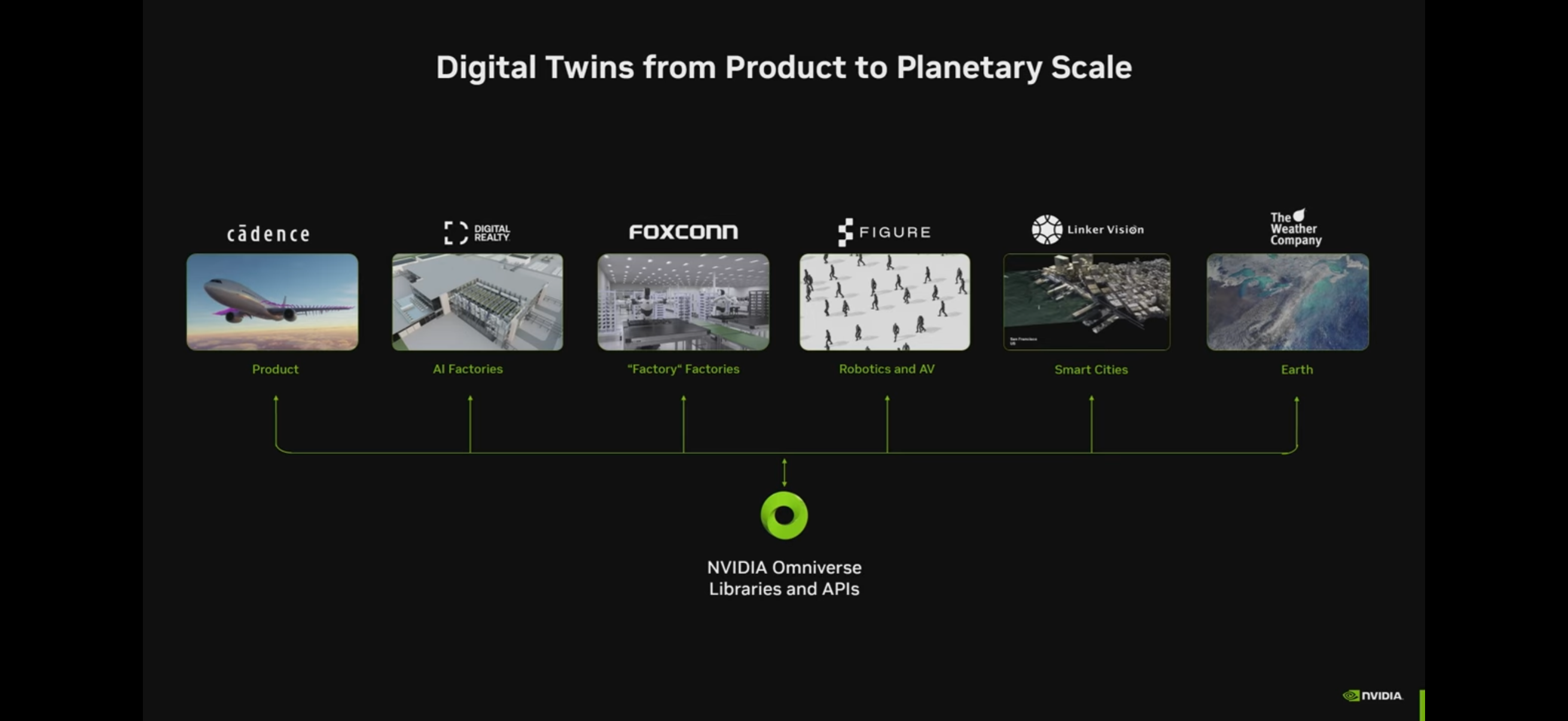

- Omniverse application areas: Product, AI Factory, actual (physical) factories, robotics, smart city, earth simulation (weather)

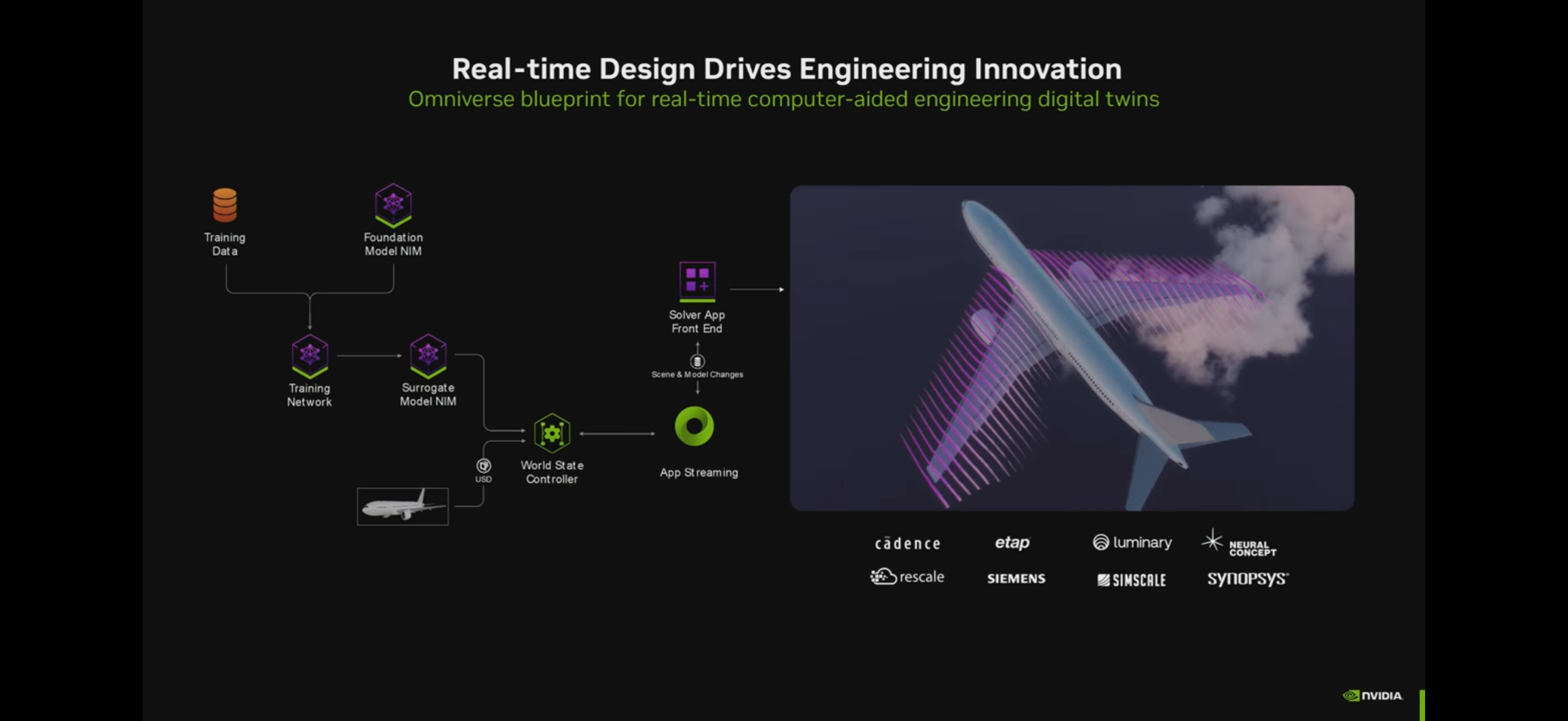

- Fluid dynamics applications: Omniverse provides the basic framework for CAE digital twins

- Actual factories: Siemens, in collaboration with NVIDIA, launched the “Digital Twin Builder”, which allows you to build a digital twin of a smart factory.

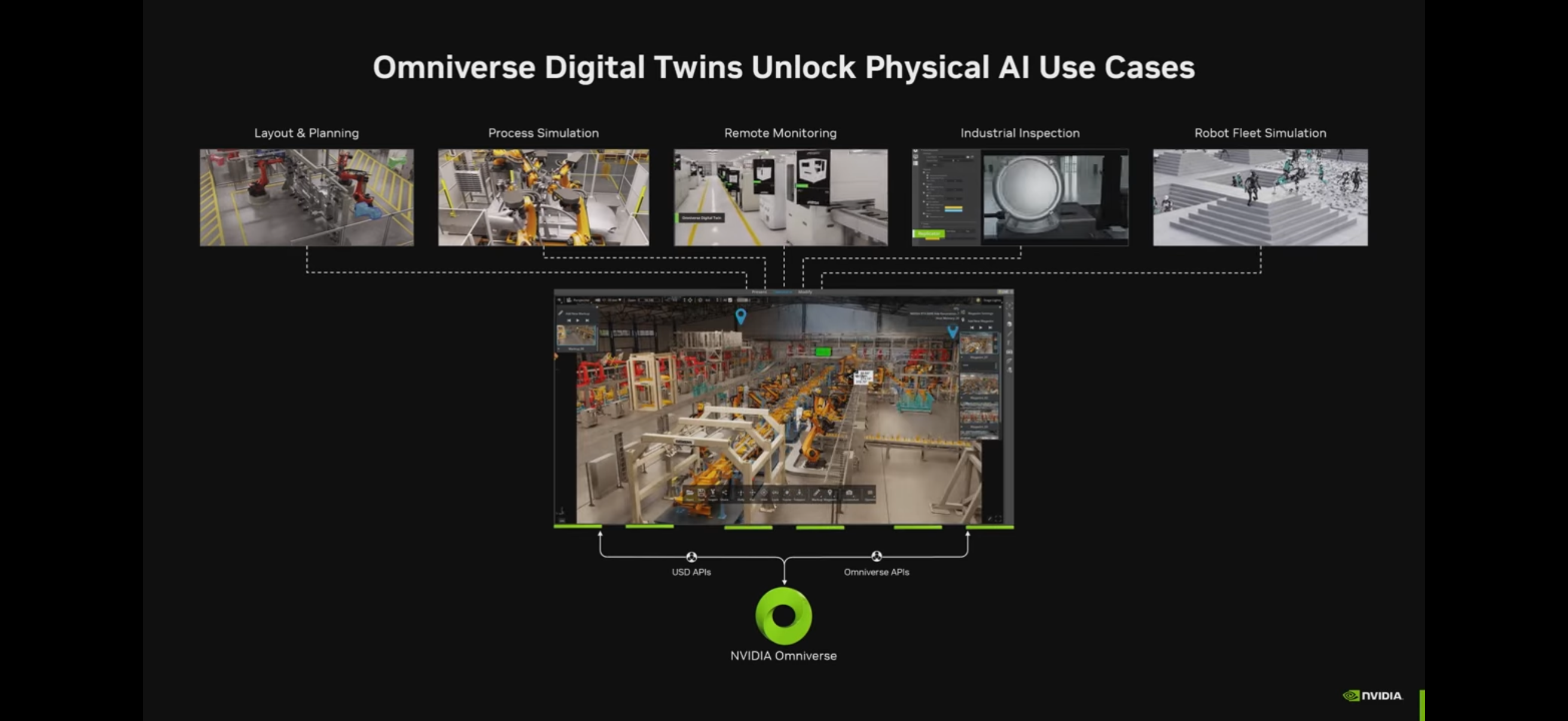

- Actual factory details: Omniverse-based smart factory functions (Layout & Planning, Process Simulation, Remote Monitoring, Industrial Inspection, Robot Fleet Simulation)

- Featured examples: BMW - Factory Digital Twin, KION - Logistics Automation, Schaeffler - Digital Twin with Robots, Quanta - Process Digital Twin, TSMC - Process Digital Twin

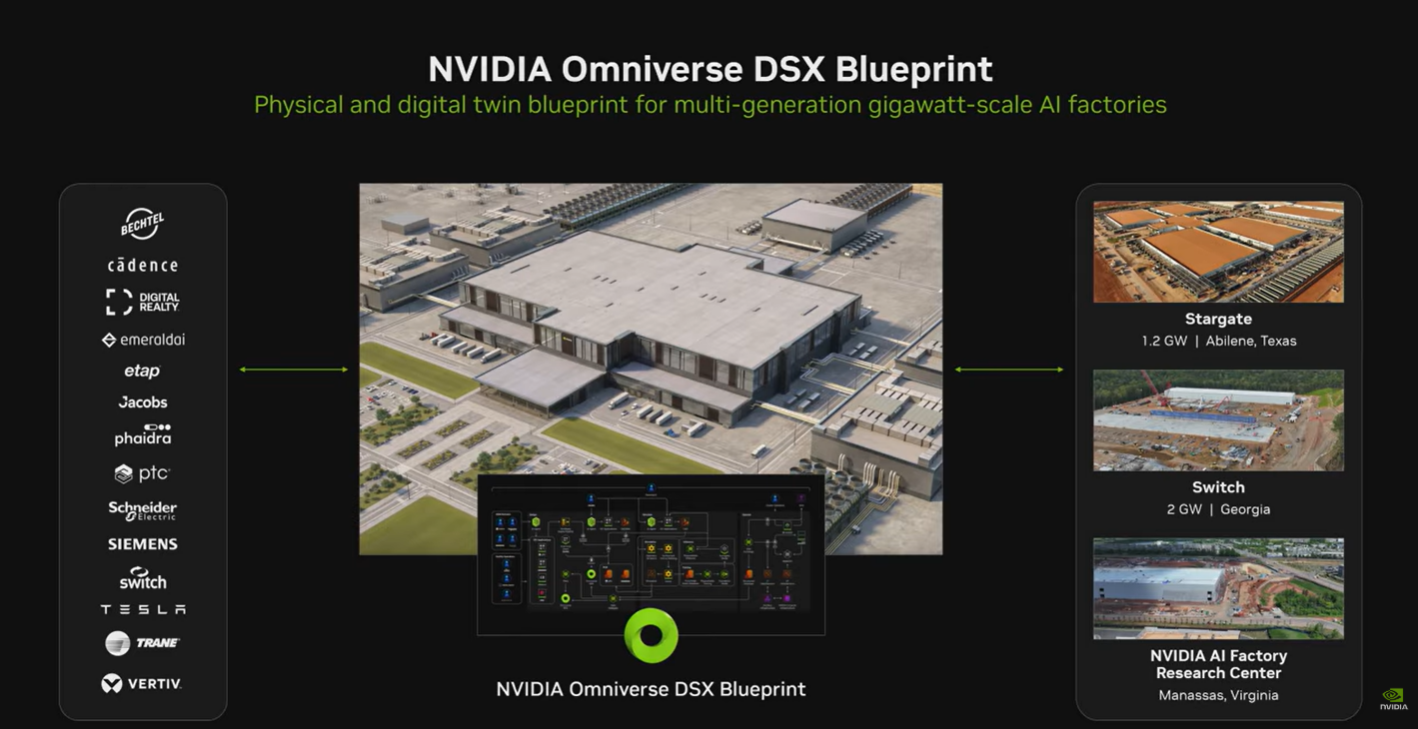

- AI Factory: Provides the digital twins required to build and operate AI data centers, covering aspects such as load and thermal behavior.

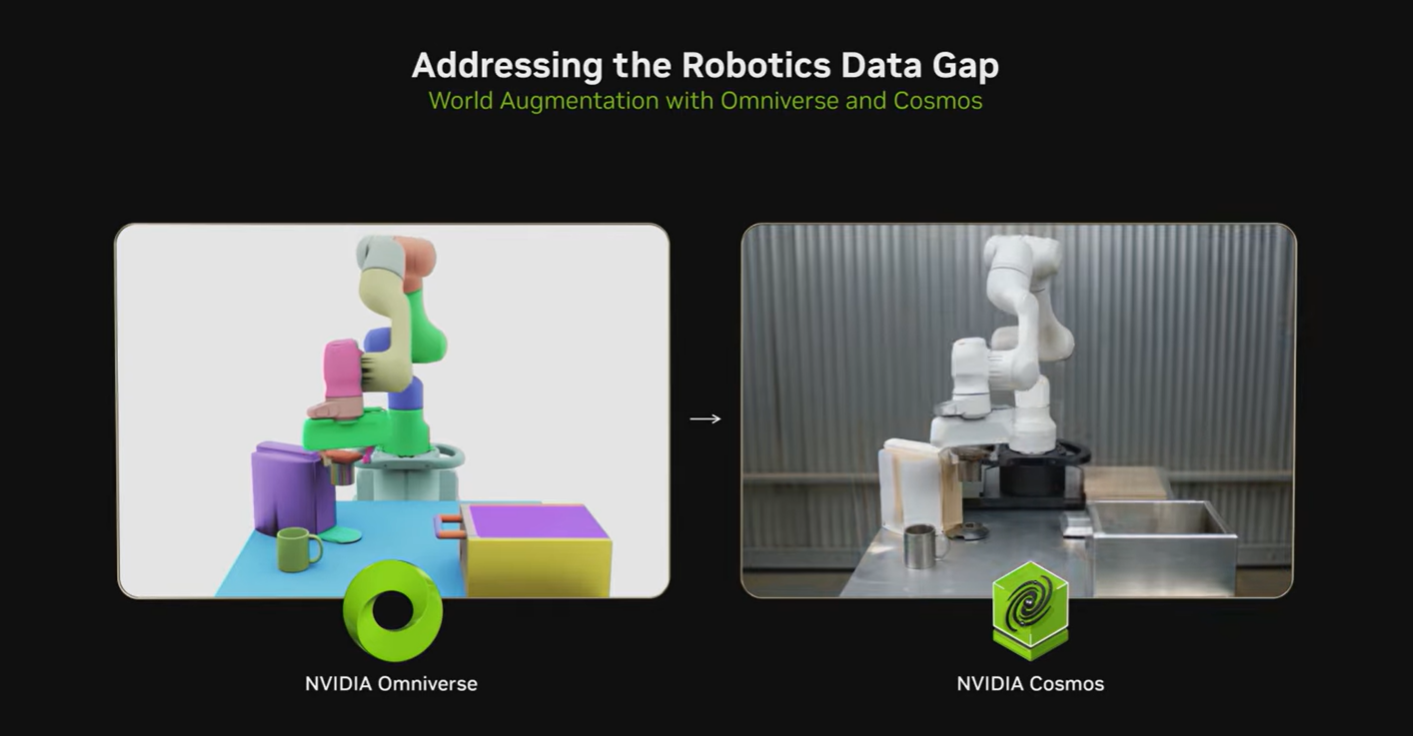

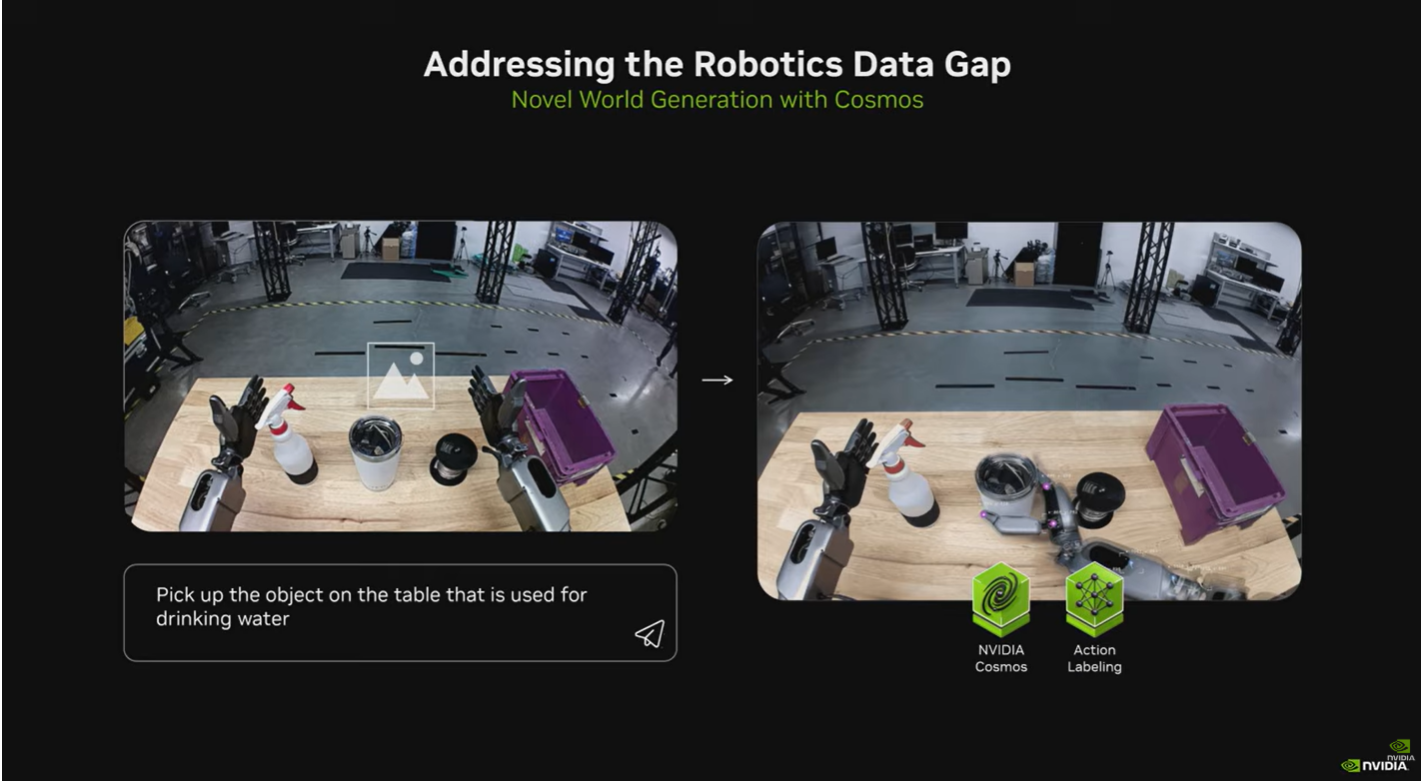

- The Robotics Data Gap: (Initially) robot data includes readings from a variety of sensors, but no behavioral data. Building AI without an existing dataset is a major problem.

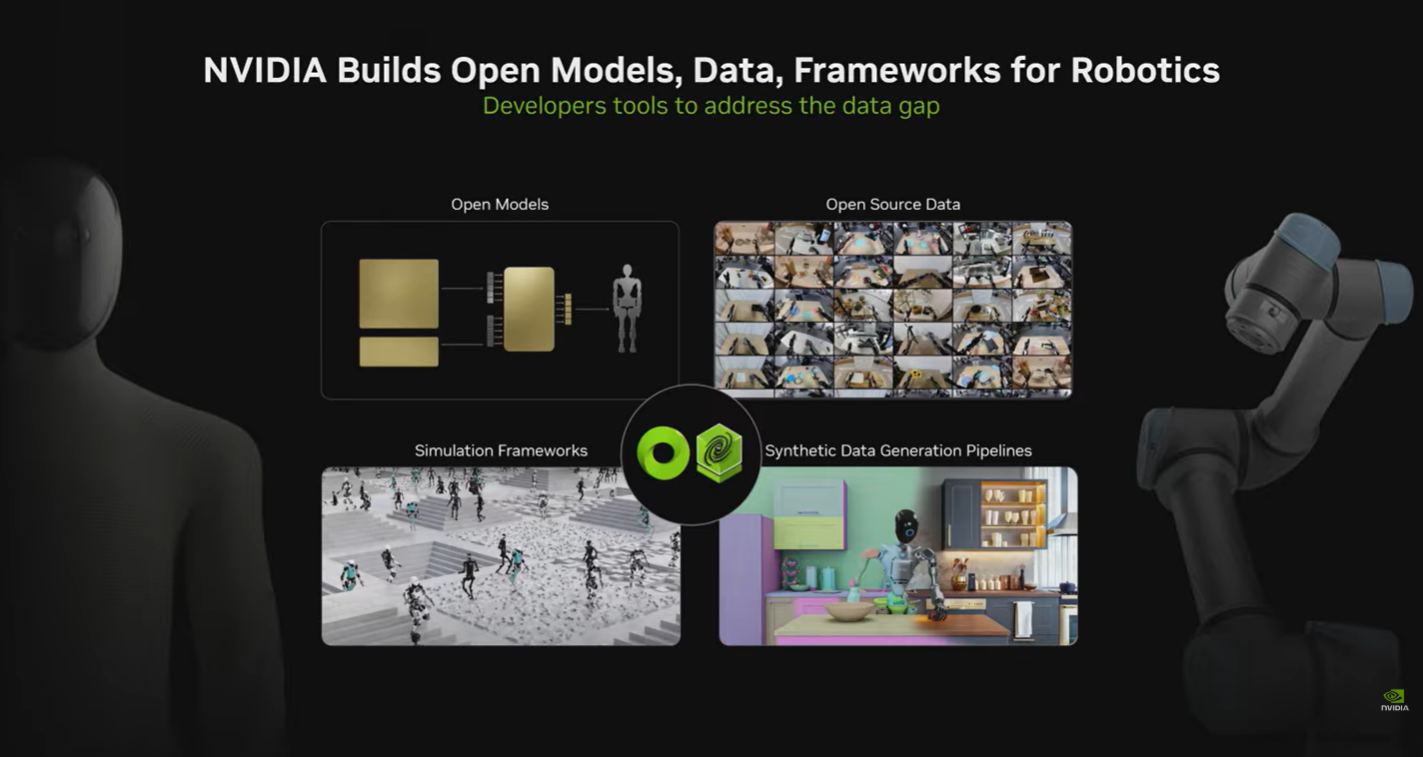

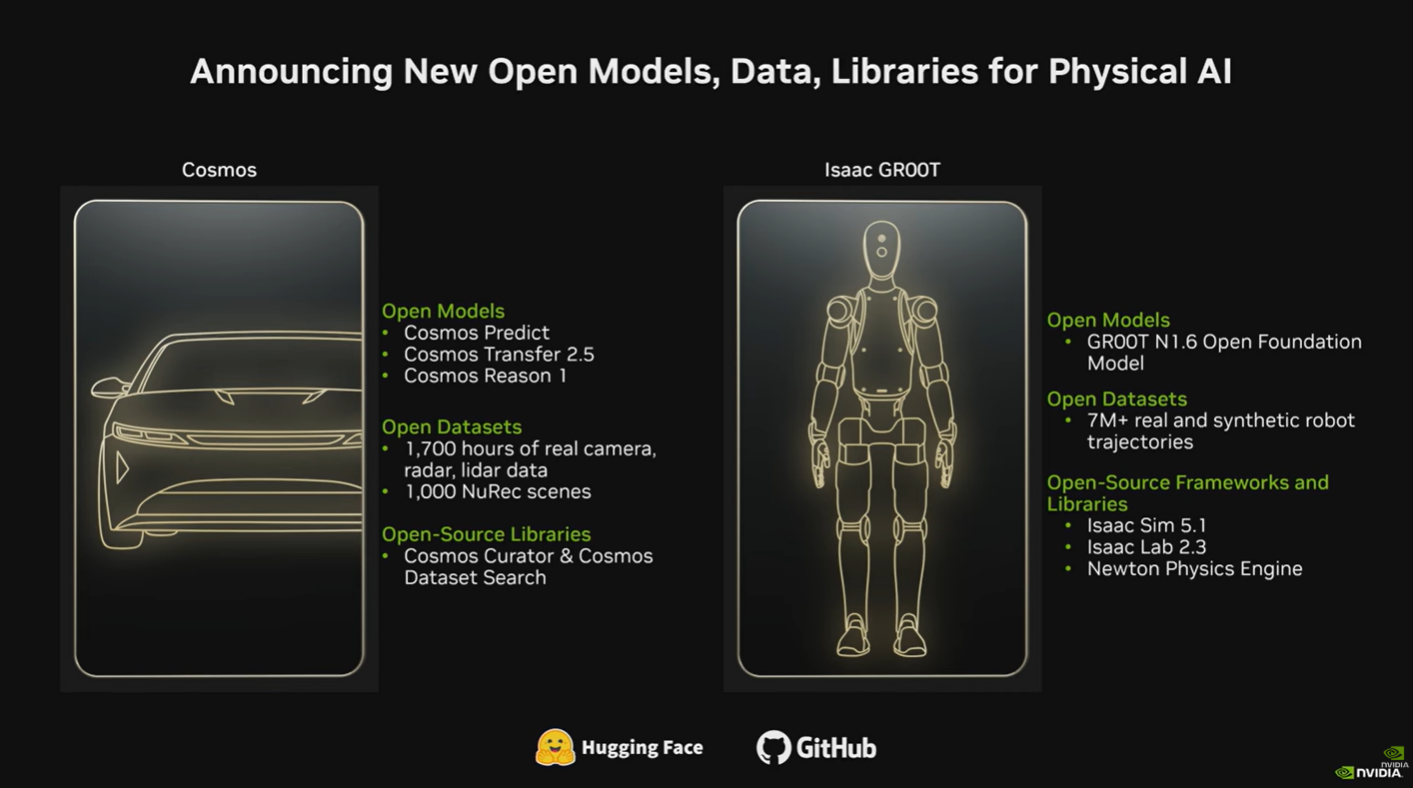

NVIDIA currently provides free foundation models, along with pipelines and frameworks, for developing Physical AI. NVIDIA has two foundation models for physical AI:

Cosmos: World Foundation Model

GR00T: Physical AI Foundation Model

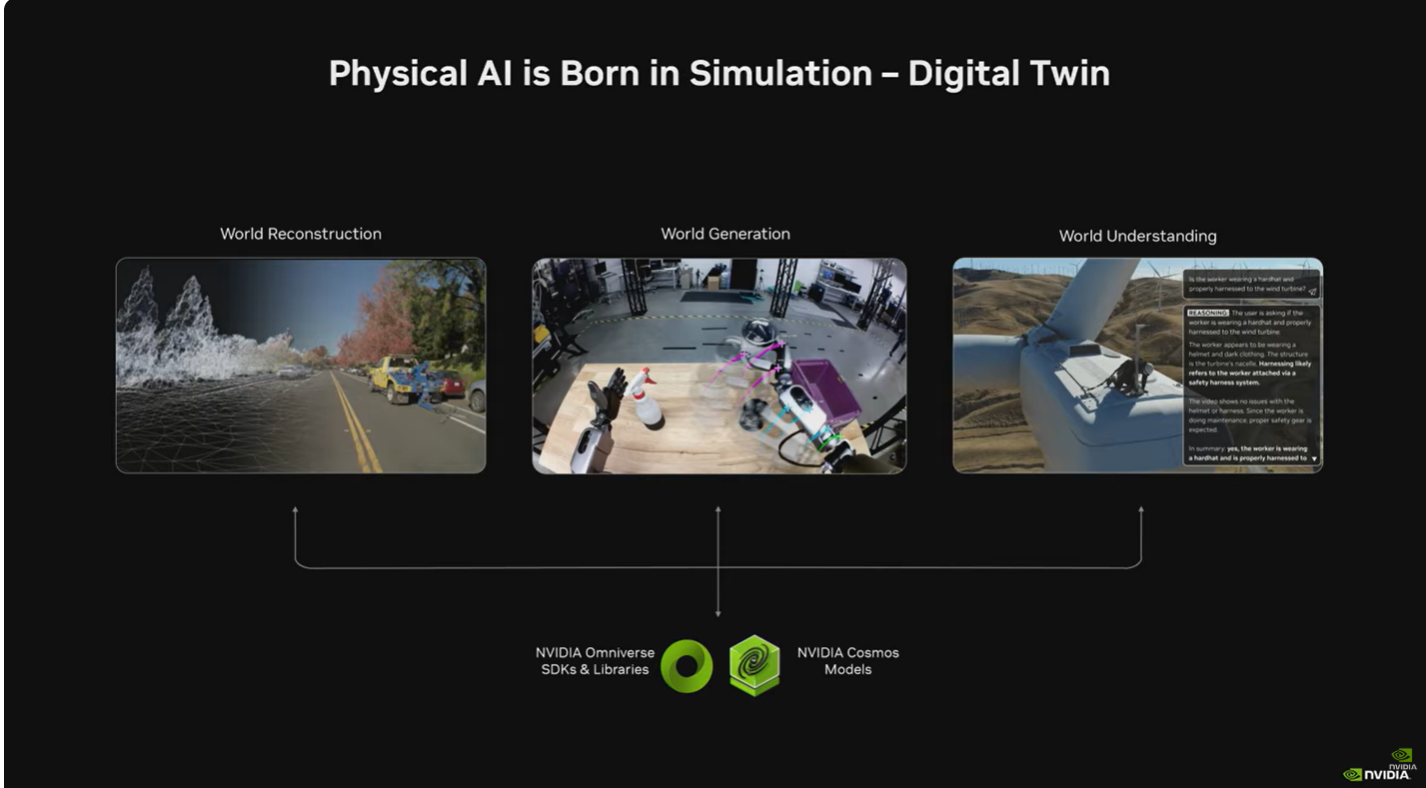

- Structure of Simulation: World Reconstruction, World Generation, and World Understanding, with the generated data fed back to improve performance.

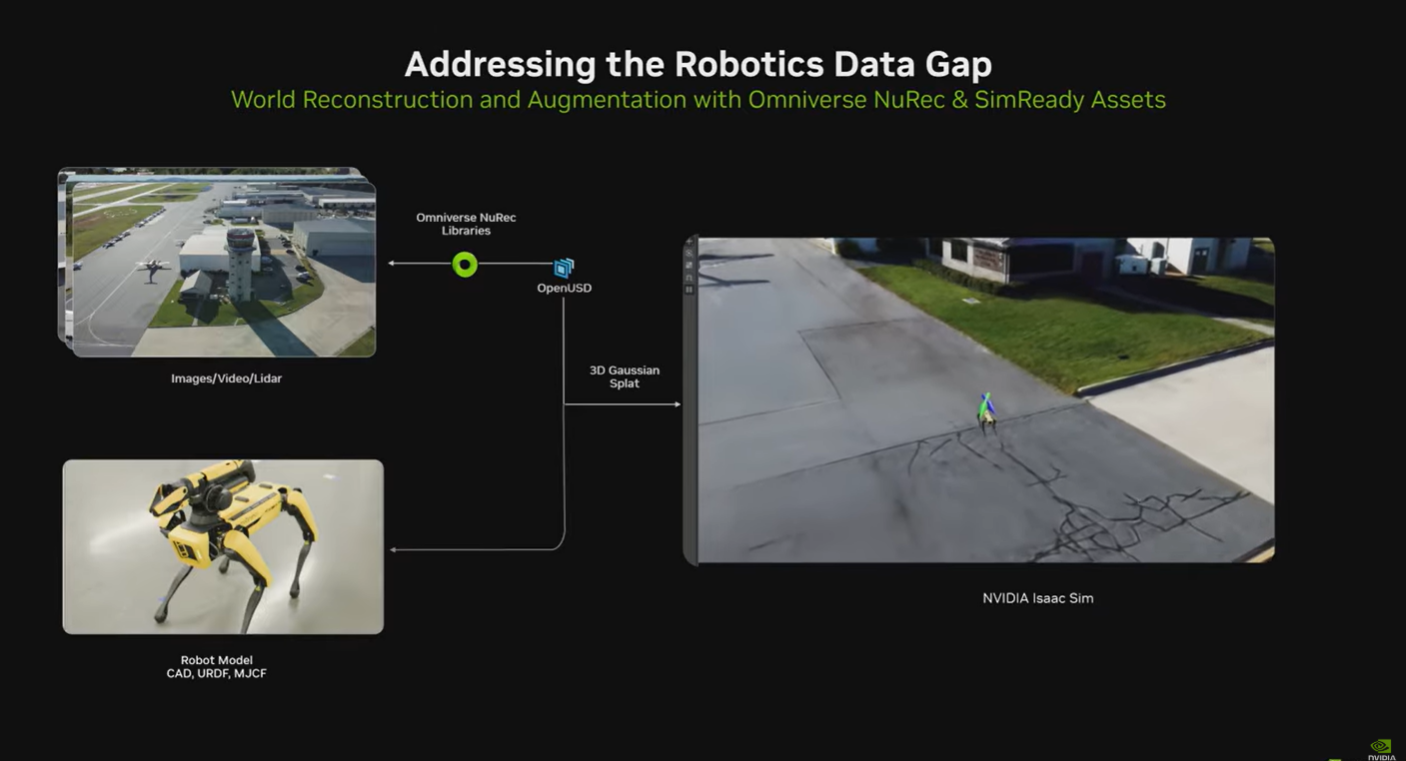

- Example of using Real to Sim: Data collected by various existing sensors (cameras, etc.) in the real world → Reconstructed into simulation (Isaac Sim) → Can be utilized in various applications

- Example of Sim to Real use: Create a simulation based on Omniverse’s Physics Solver and put it into NVIDIA Cosmos, a world model, to learn various environments (simulating various situations such as lighting, background, etc.)

- Cosmos Predict: makes it possible to implement an action prediction model applied to a vision-language model.
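The Sim to Real idea above (learning from many variations of lighting, background, and so on) is often described as domain randomization. The following is a minimal, library-free sketch of sampling such variations. It does not use any Omniverse, Isaac Sim, or Cosmos API; the parameter names, value ranges, and background labels are hypothetical and chosen only to illustrate the idea.

```python
import random
from dataclasses import dataclass
from typing import List

# Hypothetical background labels, for illustration only.
BACKGROUNDS = ["warehouse", "office", "kitchen", "outdoor"]


@dataclass
class SceneVariation:
    """One randomized configuration of an otherwise identical simulated scene."""
    light_intensity: float   # relative brightness (assumed range 0.2 to 1.5)
    background: str          # which backdrop to render
    camera_yaw_deg: float    # small camera rotation (assumed range -15 to 15)


def sample_variations(count: int, seed: int = 0) -> List[SceneVariation]:
    """Draw `count` scene variations; a fixed seed makes the set reproducible."""
    rng = random.Random(seed)
    return [
        SceneVariation(
            light_intensity=rng.uniform(0.2, 1.5),
            background=rng.choice(BACKGROUNDS),
            camera_yaw_deg=rng.uniform(-15.0, 15.0),
        )
        for _ in range(count)
    ]


if __name__ == "__main__":
    for variation in sample_variations(3):
        print(variation)
```

In a real pipeline each sampled variation would drive a renderer or world model to produce one training example; here the point is only that a single scene can be multiplied into many conditions cheaply.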

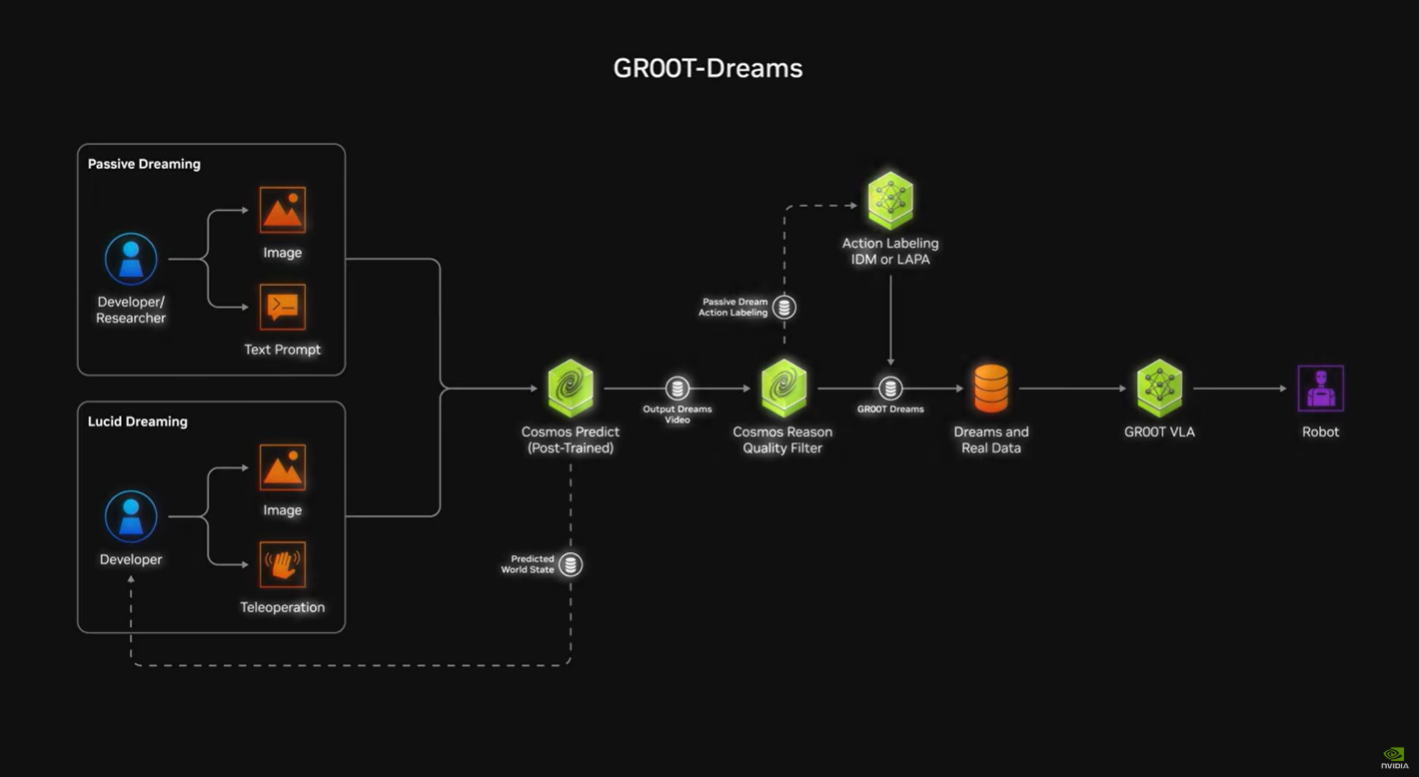

- GR00T Dreams: NVIDIA’s name for this approach, which can generate a very large amount of robot behavior simulation data.

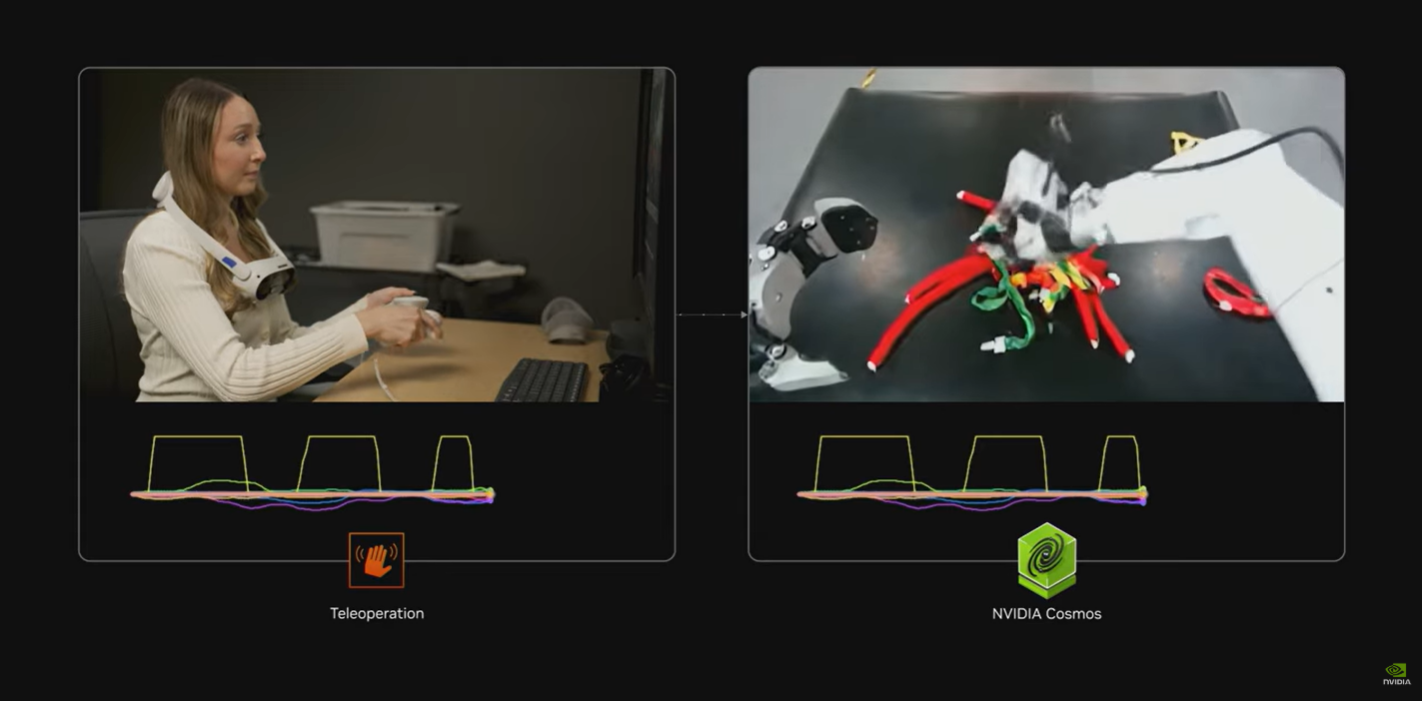

- Teleoperation: Data created by the GR00T Dreams (unsupervised) method alone is insufficient; supervised training through teleoperation linked with NVIDIA Cosmos is also possible.

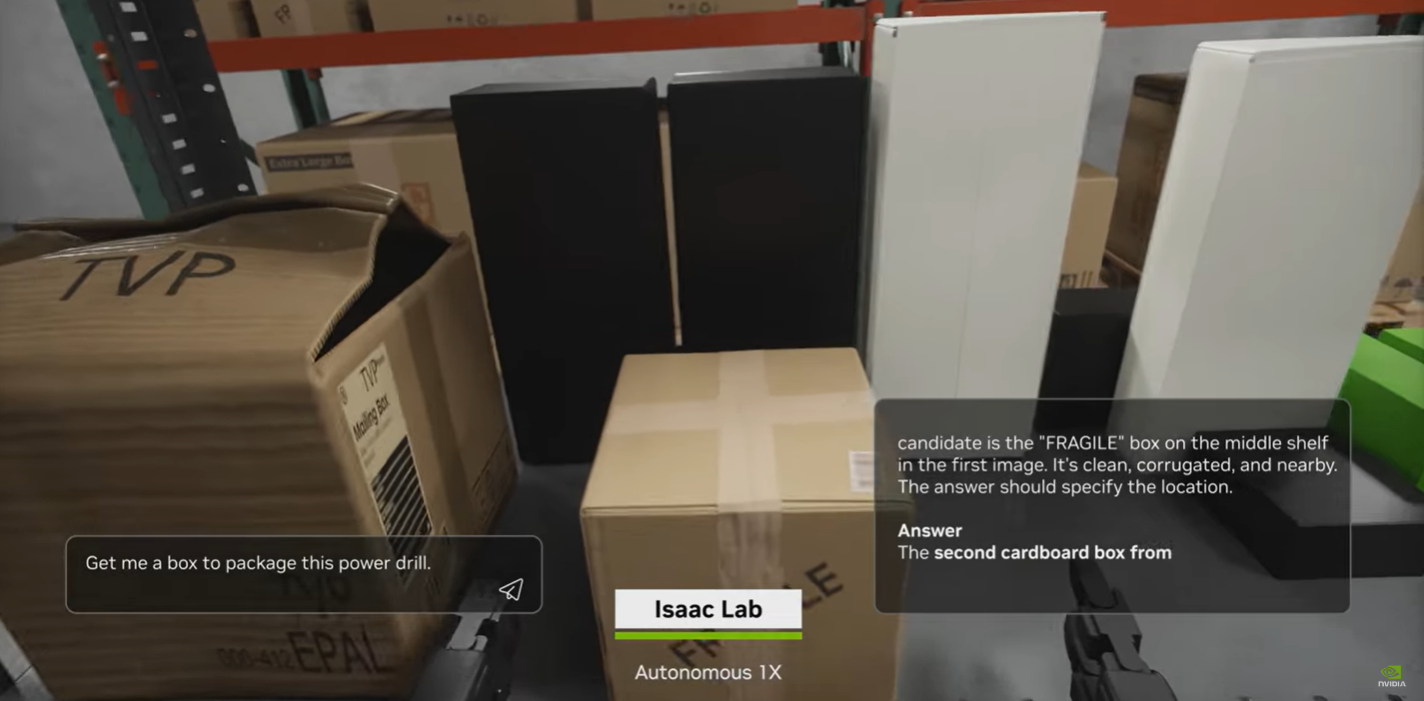

- Trained models can be run for simulation in Isaac Lab

- NVIDIA has released the latest models of Cosmos and GR00T

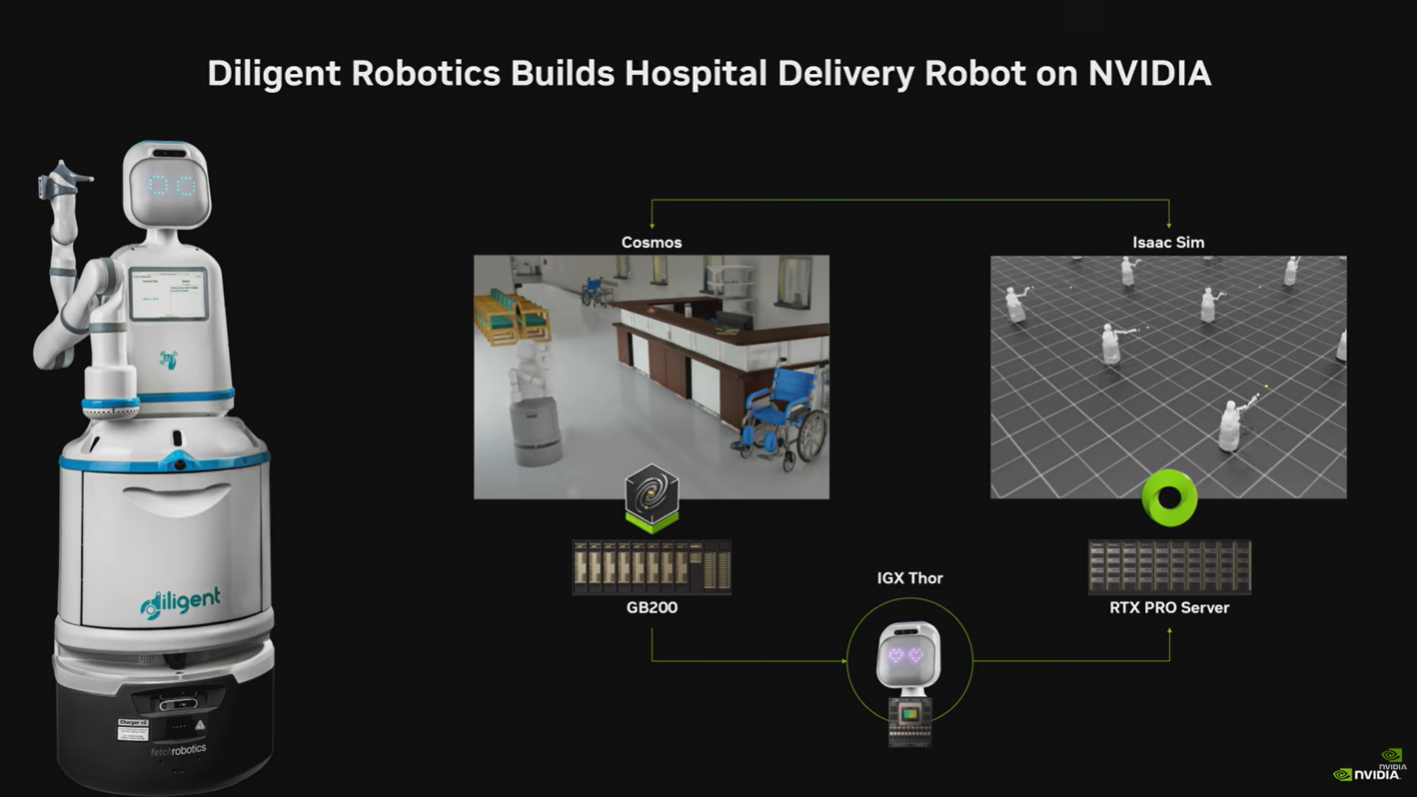

- Delivery robot example: Diligent Robotics uses Cosmos and Isaac Sim together

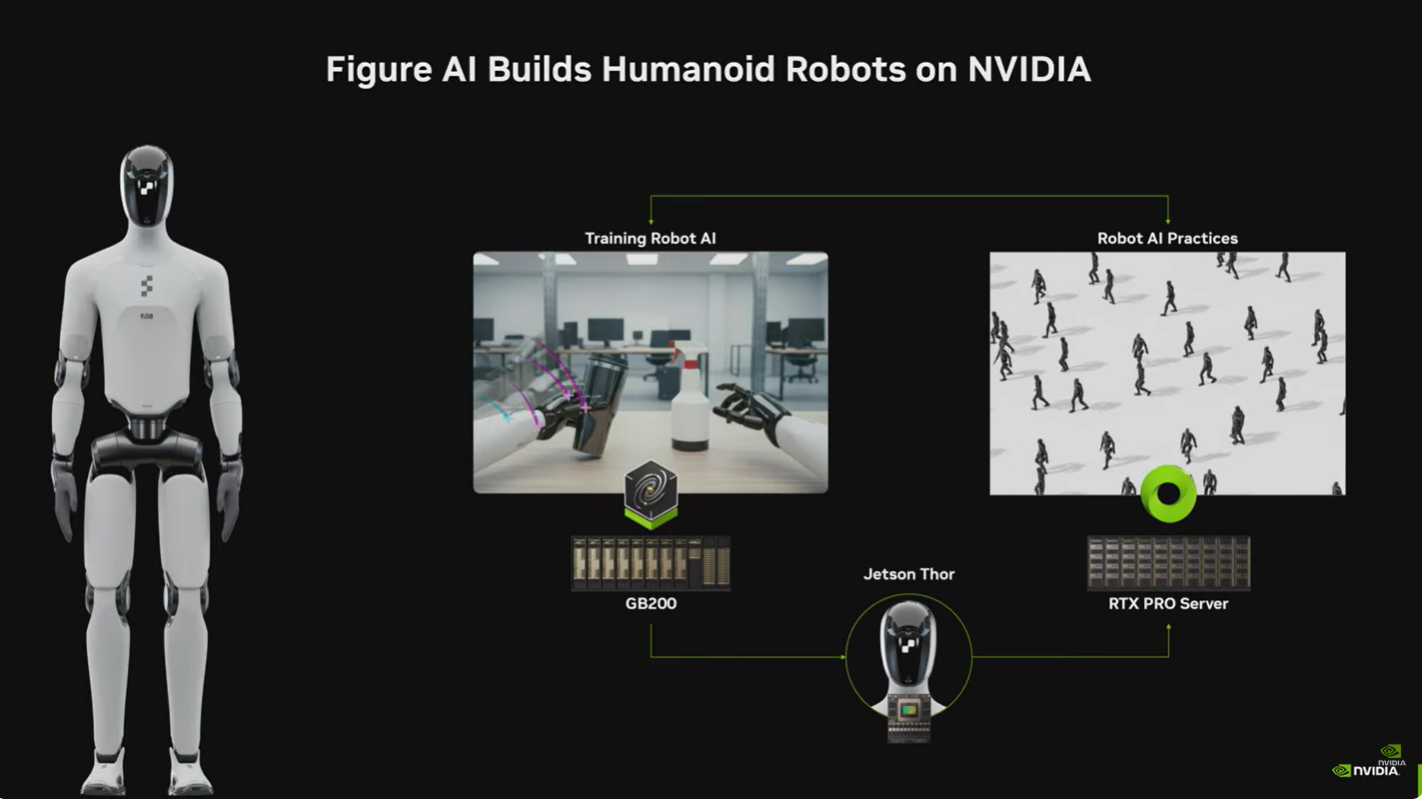

- Humanoid Story: Figure AI uses NVIDIA infrastructure for robot training and robot simulation

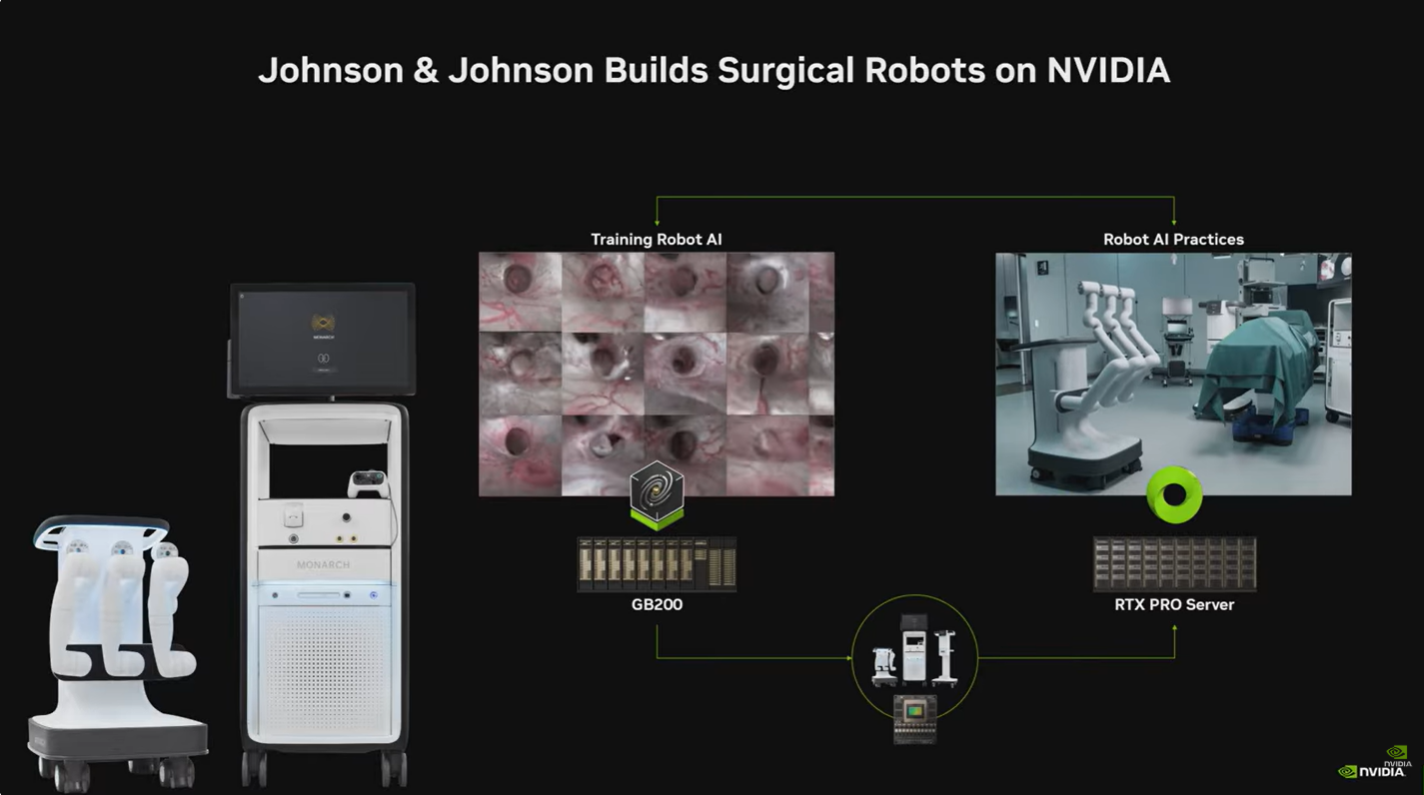

- Healthcare: J&J also uses NVIDIA infrastructure to train surgical robots and practice robotic surgery

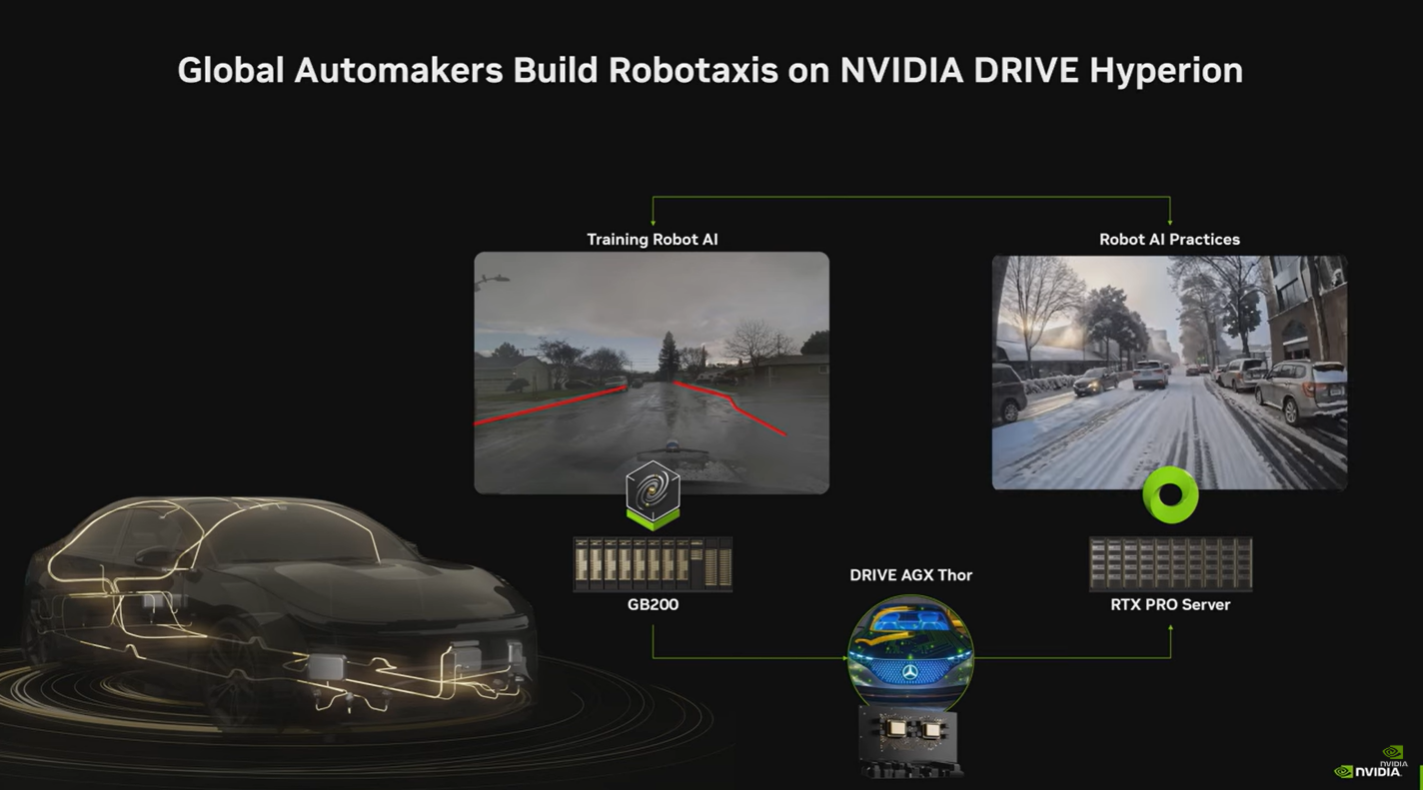

- Autonomous Driving: Autonomous driving developers train on NVIDIA’s platforms

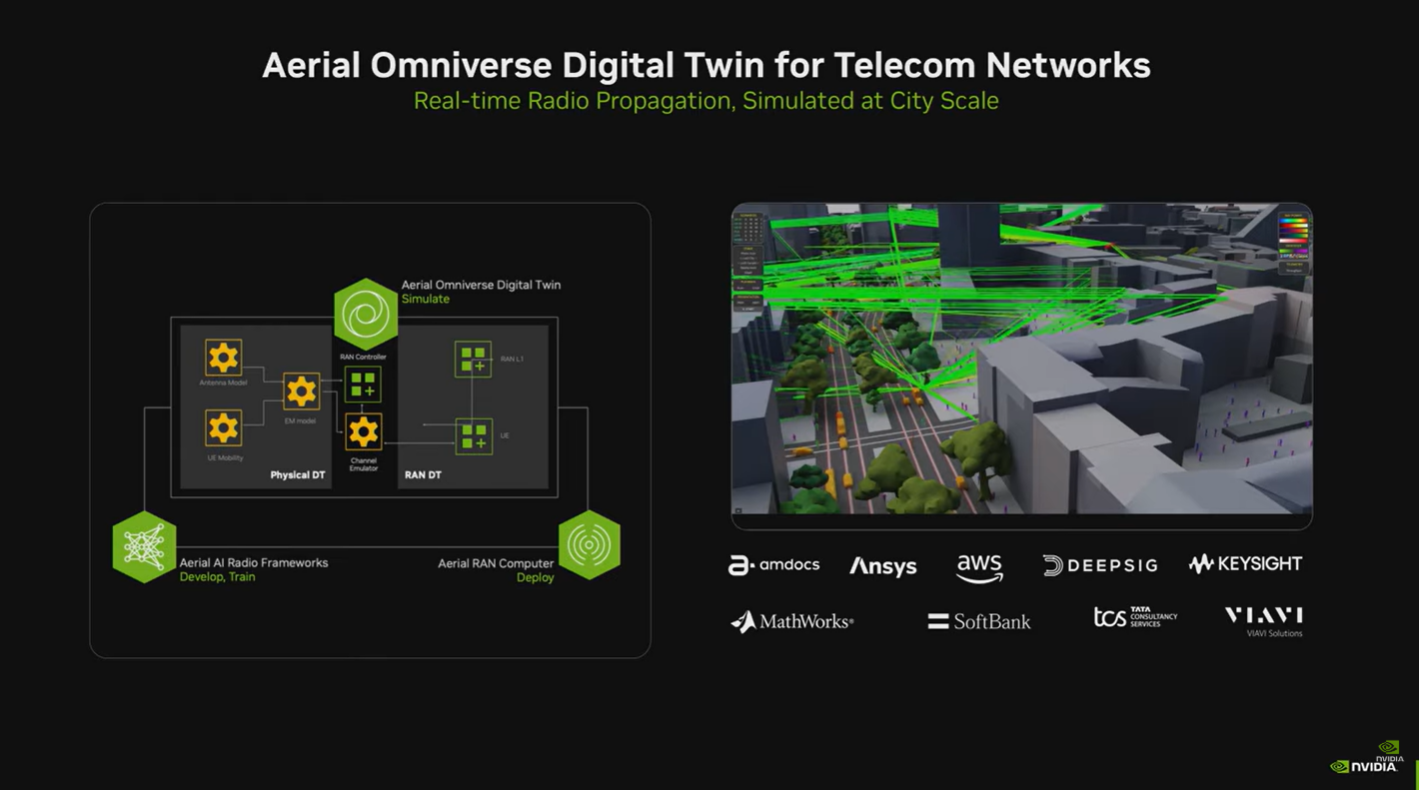

- 6G cell tower example: A city-scale digital twin used as the basis for beamforming

NVIDIA does not create models or applications on its own; it only provides compute. The talk emphasized that this is an ecosystem of partners.