4 predictions from Shane Legg, Google DeepMind Co-founder

Including the 2028 prediction that hasn't changed in 15 years

Why pay attention to Shane Legg?

The AI ‘gurus’ such as Yann LeCun, Andrej Karpathy and Ilya Sutskever (not to mention Sam Altman) have all made frequent public appearances detailing their views on the state of AI. Recently, Shane Legg, an OG AI guru in his own right, did an interview with Professor Hannah Fry, which I thought deserved attention.

Shane Legg co-founded DeepMind Technologies in 2010 with Demis Hassabis and Mustafa Suleyman. Since its acquisition by Google in 2014, DeepMind has created cutting-edge AI models such as AlphaGo and AlphaFold. AlphaFold also won Demis Hassabis and John Jumper a share of the 2024 Nobel Prize in Chemistry for protein structure prediction. More notably, Shane Legg is credited with coining the term “AGI” (Artificial General Intelligence), a term people are increasingly paying attention to.

Other key figures in the field keep echoing some of his predictions. Repetition doesn't make an argument true, but when multiple experts converge on similar predictions, it's worth paying attention.

Shane Legg’s 4 predictions

- There may be a ‘minimal AGI’ by 2028

- Reaching full AGI needs better algorithms and architecture, not just scale

- AI will surpass humans, whether people believe it to be conscious or not

- AI might actually be ethical and safe

There may be a ‘minimal AGI’ by 2028

Legg differentiates between a ‘minimal’ AGI, a ‘full’ AGI, and Artificial Super Intelligence (ASI):

Minimal AGI: AI capable of completing cognitive tasks that a ‘typical’ person can do.

Full AGI: AI capable of doing things that only extraordinary people can do (such as Einstein or Mozart).

ASI: AI capable of surpassing anything that humans can do.

I’ll admit, I’d been using ‘AGI’ as if it were a single finish line. Legg’s breakdown into minimal/full/ASI reframes the whole conversation, and makes 2028 feel less like sci-fi.

Legg has been predicting since 2011 that the world may see a minimal AGI by 2028. It is remarkable how little that prediction has changed over nearly 15 years. In his own words from 2012:

“I give it a log-normal distribution with a mean of 2028 and a mode of 2025, under the assumption that nothing crazy happens like a nuclear war.”
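For the curious, here is a rough sketch of what a log-normal with that mean and mode could look like. The quote does not say what the distribution is measured over, so I am assuming it is "years after 2011" (the `REFERENCE_YEAR` below is my own guess, not something Legg stated). For a log-normal, mean = exp(mu + sigma²/2) and mode = exp(mu - sigma²), so the two numbers are enough to recover mu and sigma.

```python
import math

def lognormal_params(mean, mode):
    """Recover (mu, sigma) of a log-normal from its mean and mode.

    mean = exp(mu + sigma^2 / 2) and mode = exp(mu - sigma^2),
    so ln(mean / mode) = 1.5 * sigma^2.
    """
    if not (mean > mode > 0):
        raise ValueError("a log-normal needs mean > mode > 0")
    sigma_sq = (2.0 / 3.0) * math.log(mean / mode)
    mu = math.log(mean) - sigma_sq / 2.0
    return mu, math.sqrt(sigma_sq)

def lognormal_cdf(x, mu, sigma):
    """Probability that a log-normal variable is at most x."""
    return 0.5 * (1.0 + math.erf((math.log(x) - mu) / (sigma * math.sqrt(2.0))))

# ASSUMPTION: time is counted in years after 2011 (not stated in the quote).
REFERENCE_YEAR = 2011

mu, sigma = lognormal_params(mean=2028 - REFERENCE_YEAR, mode=2025 - REFERENCE_YEAR)

# The median of a log-normal is exp(mu).
median_year = REFERENCE_YEAR + math.exp(mu)

# Probability mass at or before the mean year, under the same assumption.
p_by_2028 = lognormal_cdf(2028 - REFERENCE_YEAR, mu, sigma)

print(f"sigma={sigma:.3f}, median year={median_year:.1f}, P(by 2028)={p_by_2028:.2f}")
```

Under this reading the median sits between the mode and the mean, and a bit more than half of the probability falls on or before 2028. A different reference year would change the numbers, so treat this as an illustration of the shape, not as Legg's actual model.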

We’ve seen sparks of AGI progress, but it’s wildly uneven. LLMs can fluently discuss quantum physics in 150 languages, yet have famously stumbled at counting the letter ‘r’ in ‘strawberry.’

Reaching full AGI needs better algorithms and architecture, not just scale

Legg points out that the challenges AI currently faces cannot be solved simply by increasing scale. LLMs today do not have ‘episodic memory’ like humans do. After training, the model does not acquire new memories the way humans do in everyday life. Think of Claude or ChatGPT, which cannot recall what they did the day before or in a different session.

An episodic memory system would store new information and then slowly teach it back to the main model. There is a lot of ongoing research, such as SEAL from MIT, but there does not seem to be a complete solution just yet.
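To make the "store, then slowly teach back" idea concrete, here is a toy sketch. Everything in it is made up for illustration: `ToyModel`, `EpisodicBuffer`, the learning rate and the threshold are my own stand-ins, and this is not how SEAL or any real system works. The only point is that the memory is written instantly, while the main model absorbs it gradually over repeated replays.

```python
from collections import deque

class ToyModel:
    """Hypothetical stand-in for the main model: a confidence per fact."""

    def __init__(self):
        self.weights = {}

    def knows(self, fact, threshold=0.5):
        return self.weights.get(fact, 0.0) >= threshold

    def train_step(self, fact, lr):
        # Move the confidence a small fraction of the way toward 1.0.
        old = self.weights.get(fact, 0.0)
        self.weights[fact] = old + lr * (1.0 - old)

class EpisodicBuffer:
    """Hypothetical fast memory: stores episodes immediately."""

    def __init__(self, capacity=100):
        self.episodes = deque(maxlen=capacity)

    def store(self, fact):
        self.episodes.append(fact)

    def consolidate(self, model, lr=0.2):
        # Replay every stored episode once, nudging the slow model each time.
        for fact in self.episodes:
            model.train_step(fact, lr)

model = ToyModel()
memory = EpisodicBuffer()

fact = "user prefers metric units"
memory.store(fact)  # available in the buffer right away

rounds = 0
while not model.knows(fact):  # the main model only catches up after several replays
    memory.consolidate(model)
    rounds += 1

print(f"model absorbed the fact after {rounds} consolidation rounds")
```

The hard part that this toy skips entirely is doing the "teach back" step on a real network without it forgetting what it already knew.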

The memory problem is about getting the model to learn from its interactions with users. Right now, every conversation with Claude or ChatGPT is essentially a fresh start. The model has its training data, but it doesn’t accumulate experience the way you or I do. We’re trying to solve this with bigger context windows (so it can ‘see’ more of the conversation) and RAG (so it can pull in external information), but neither is true learning.
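Here is a minimal sketch of why RAG is lookup and not learning. It uses simple word overlap in place of a real embedding search, and `NOTES`, `retrieve` and `build_prompt` are hypothetical names I made up for the example. Notice that nothing about the model changes: the relevant note is just pasted into the prompt each time.

```python
import re

def tokenize(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=1):
    """Return the k documents sharing the most words with the query.

    ASSUMPTION: word overlap stands in for the embedding similarity
    a real RAG pipeline would typically use.
    """
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Paste the retrieved text in front of the question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"

# Hypothetical external store; the model itself never sees these in training.
NOTES = [
    "Yesterday the user asked for a summary of the quarterly report.",
    "The user's favorite programming language is Rust.",
    "The office is closed on public holidays.",
]

prompt = build_prompt("Which programming language does the user like?", NOTES)
print(prompt)
```

Delete the note from `NOTES` and the "memory" is gone, which is exactly the gap between retrieving information and having learned it.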

AI will surpass humans, whether people believe it to be conscious or not

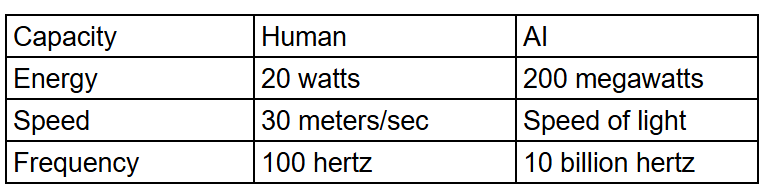

AI surpassing human capabilities is a simple matter of biological vs synthetic hardware, though people will continue to differ on whether AI has true ‘consciousness’, as there are many conflicting definitions of what consciousness is.

Up until now, humans have been the more ‘efficient’ hardware of the two, as we spend less energy and require less throughput to carry out highly complex, abstract reasoning. But efficiency aside, data centers, with their sheer brute force, may be enough to do better than us.

AI might actually be ethical and safe

Legg points to AI’s growing ability to conduct ‘System 2’ thinking, the slow, deliberate kind of reasoning. As CoT (Chain-of-Thought) is applied more and more in AI, the models’ reasoning increasingly resembles that of humans. His view is that we can audit this System 2 thinking and validate the ethical reasoning to make sure that AI is safe.

He does not delve much deeper into this part, though. Legg’s optimism about AI ethics might hinge on this same memory problem. If AI can continuously learn and update its reasoning, and not just apply frozen ethical guidelines from training, maybe it can handle novel ethical situations the way humans do. But that’s a big ‘if.’

Where I’m landing with this:

Whether we’re talking about reaching AGI or making AI actually ethical, the models need continuous learning: constantly updating their understanding based on new information, just like humans do. Right now, AI is stuck in a weird limbo. It’s incredibly smart but also frozen in time, unable to truly learn from interactions without being retrained or fine-tuned.

The current workarounds are either (1) massively expanding context windows so the AI can “remember” more of a conversation, or (2) using RAG (Retrieval-Augmented Generation) to pull in relevant information on demand. Both are clever Band-Aids, but they’re not really learning—they’re just better at finding and using information.

I keep wondering: what else is out there? If episodic memory is the missing piece for both AGI capability and ethical reasoning, and context windows + RAG aren’t cutting it, what’s the third option? That feels like the question worth watching over the next few years.

And if Legg’s 2028 timeline holds, we’ve only got a few years to figure it out. What do you think? Let me know in the comment section or reach out to me. Let’s talk.